Gender Representation in AI – Part 1: Utilizing StyleGAN to Explore Gender Directions in Face Image Editing

Artificially enhancing face images is all the rage

What can AI contribute?

In recent years, image filters have become wildly popular on social media. These filters let anyone adjust their face and surroundings in different ways, leading to entertaining results. Often, filters enhance facial features that seem to match a certain beauty standard. As AI experts, we asked ourselves what our tools can achieve when it comes to face representations. One issue that sparked our interest is gender representation. We were curious: how does the AI represent gender differences when creating these images? And on top of that: can we generate gender-neutral versions of existing face images?

Using StyleGAN on existing images

When thinking about what existing images to explore, we were curious to see how our own faces would be edited. Additionally, we decided to use several celebrities as inputs – after all, wouldn’t it be intriguing to observe world-famous faces morphed into different genders?

Currently, text-prompt-based image generation models like DALL-E are often at the center of public discourse. Yet the AI-driven creation of photo-realistic face images has long been a focus of researchers because natural-looking faces are so difficult to create. Searching for suitable AI models to approach our idea, we chose the StyleGAN architectures, which are well known for generating realistic face images.

Adjusting facial features using StyleGAN

One crucial aspect of this AI’s architecture is the use of a so-called latent space from which we sample the inputs of the neural network. You can picture this latent space as a map on which every possible artificial face has a defined coordinate. Usually, we would just throw a dart at this map and be happy about the AI producing a realistic image. But as it turns out, this latent space allows us to explore various aspects of artificial face generation. When you move from one face’s location on that map to another face’s location, you can generate mixtures of the two faces. And as you move in any arbitrary direction, you will see random changes in the generated face image.
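To make the map analogy concrete, here is a minimal sketch in plain Python of sampling two latent coordinates and walking from one to the other. It is an illustration under assumptions, not the code behind this article: the 512-dimensional latent size matches common StyleGAN configurations, the function names are illustrative and not part of any StyleGAN library, and the generator network that would turn each coordinate into an image is omitted.

```python
import random

LATENT_DIM = 512  # assumed size; common StyleGAN configurations use 512 dimensions


def sample_latent(rng, dim=LATENT_DIM):
    """Throw a dart at the map: draw a random latent coordinate."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def interpolate(z_a, z_b, t):
    """Return the point a fraction t of the way from z_a to z_b (0 <= t <= 1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
z_a = sample_latent(rng)
z_b = sample_latent(rng)

# Five coordinates from face A to face B; feeding each one to the generator
# (not shown) would yield a gradual mixture of the two faces.
path = [interpolate(z_a, z_b, i / 4) for i in range(5)]
print(len(path), "latent coordinates of dimension", len(path[0]))
```

In practice, these vectors would be NumPy arrays or PyTorch tensors rather than Python lists; lists are used here only to keep the sketch dependency-free.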

This makes the StyleGAN architecture a promising approach for exploring gender representation in AI.

Can we isolate a gender direction?

So, are there directions that allow us to change specific aspects of the generated image? Could a gender-neutral representation of a face be approached this way? Previous work has found semantically meaningful directions, yielding fascinating results. One of those directions can alter a generated face image to have a more feminine or masculine appearance. This lets us explore gender representation in images.

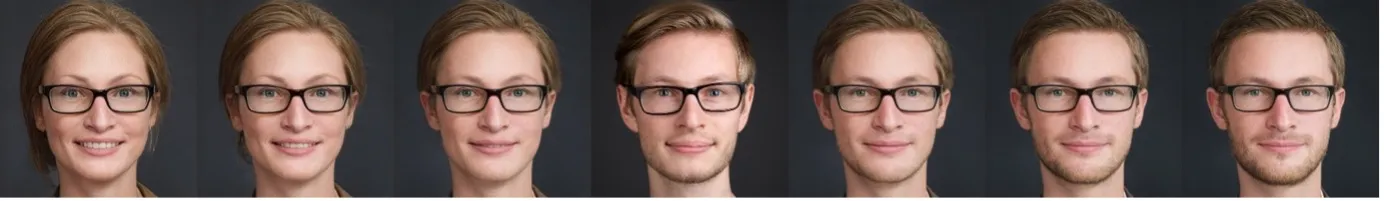

The approach we took for this article was to generate multiple images by making small steps in each gender’s direction. That way, we can compare various versions of the faces, and the reader can, for example, decide which image comes closest to a gender-neutral face. It also allows us to examine the changes more clearly and look for unwanted characteristics in the edited versions.
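The stepping procedure can be sketched as follows. This is an illustration under assumptions rather than the code used for the article: the latent coordinate and the direction are small made-up vectors, the helper names are hypothetical, and we assume that negative steps lead to the feminine side and positive steps to the masculine side.

```python
import math


def normalize(v):
    """Scale a vector to unit length so step sizes are comparable."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def move_along(w, direction, step):
    """Shift latent coordinate w by `step` along `direction`."""
    return [wi + step * di for wi, di in zip(w, direction)]


def edit_series(w, direction, max_step=3.0, n_each_side=3):
    """Return (step, edited latent) pairs centered on the original coordinate."""
    unit = normalize(direction)
    steps = [max_step * k / n_each_side
             for k in range(-n_each_side, n_each_side + 1)]
    return [(s, move_along(w, unit, s)) for s in steps]


# Toy 4-dimensional values; real StyleGAN latents are much larger.
w_original = [0.2, -1.0, 0.5, 0.0]
gender_direction = [1.0, 0.0, 2.0, 0.0]  # made-up direction for illustration

series = edit_series(w_original, gender_direction)
for step, w_edited in series:
    print(f"step {step:+.1f}: {[round(x, 3) for x in w_edited]}")
```

The middle entry of the series has step 0 and is the unedited coordinate, which mirrors placing the original image at the center of a photo series.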

Introducing our own faces to the AI

The described method can be used to alter any face generated by the AI towards a more feminine or masculine version. However, a crucial challenge remains: since we want to use our own images as a starting point, we must be able to obtain the latent coordinate (in our analogy, the correct place on the map) for a given face image. This sounds easy at first, but the StyleGAN architecture we used only goes one way, from latent coordinate to generated image, not the other way around. Thankfully, other researchers have explored this very problem. Our approach thus builds heavily on the Python notebook found here. The researchers built another “encoder” AI that takes a face image as input and finds its corresponding coordinate in the latent space.

And with that, we finally have all parts necessary to realize our goal: exploring different gender representations using an AI. In the photo sequences below, the center image is the original input image. Towards the left, the generated faces appear more female; towards the right, they seem more male. Without further ado, we present the AI-generated images of our experiment:

Results: photo series from female to male

Unintended biases

After finding the latent coordinates corresponding to our input images, we generated artificial versions of the faces. We then moved them along the chosen gender direction, creating “feminized” and “masculinized” faces. Looking at the results, we see some unexpected behavior in the AI: it seems to recreate classic gender stereotypes.

Big smiles vs. thick eyebrows

Whenever we edit an image to look more feminine, we gradually see the mouth open into a stronger smile, and vice versa. Likewise, eyes grow larger and open wider in the female direction. The Drake and Kim Kardashian examples show a visible change in skin tone from darker to lighter when moving along the series from feminine to masculine. The chosen gender direction appears to edit out curls in the female direction (as opposed to the male direction), as the examples of Marilyn Monroe and blog co-author Isabel Hermes show. We also asked ourselves whether the lack of longer hair in Drake’s female direction would be remedied if we extended his photo series. At the extremes, eyebrows are thinner and more arched on the female side and straighter and thicker on the male side. Eye and lip makeup increases heavily on faces that move in the female direction, darkening the area around the eyes. This may be why we perceived the male versions we generated as more natural-looking than the female versions.

Finally, we would like to challenge you, as the reader, to examine the photo series above closely. Try to decide which image you perceive as gender-neutral, i.e., as much male as female. What made you choose that image? Did any of the stereotypical features described above impact your perception?

A natural question that arises from image series like the ones generated for this article is whether there is a risk that the AI reinforces current gender stereotypes.

Is the AI to blame for recreating stereotypes?

Given that the adjusted images recreate certain gender stereotypes, such as a more pronounced smile in female images, one possible conclusion is that the AI was trained on a biased dataset. And indeed, the underlying StyleGAN was trained on image data from Flickr, which inherits the biases of that website. However, the main goal of this training was to create realistic images of faces. And while the results might not always look as we expect or want, we would argue that the AI did precisely that in all our tests.

To alter the images, however, we used the aforementioned latent direction. In general, such latent directions rarely change only a single aspect of the created image. Instead, like walking in a random direction on our latent map, many elements of the generated face usually change simultaneously. Identifying a direction that alters only a single aspect of a generated image is anything but trivial. For our experiment, the chosen direction was created primarily for research purposes without accounting for said biases. It can therefore introduce unwanted artifacts in the images alongside the intended alterations. Yet it is reasonable to assume that a latent direction exists that allows us to alter the gender of a face created by the StyleGAN without affecting other facial features.

Overall, the implementations we build upon use different AIs and datasets, and therefore the complex interplay of those systems doesn’t allow us to identify the AI as a single source for these issues. Nevertheless, our observations suggest that doing due diligence to ensure the representation of different ethnic backgrounds and avoid biases in creating datasets is paramount.

Fig. 7: Picture from “A Sex Difference in Facial Contrast and its Exaggeration by Cosmetics” by Richard Russell

Subconscious bias: looking at ourselves

A study by Richard Russell deals with human perception of gender in faces. Ask yourself: which gender would you intuitively assign to the two images above? It turns out that most people perceive the left person as male and the right person as female. Look again. What separates the faces? There is no difference in facial structure. The only difference is darker eye and mouth regions. It becomes apparent that increased contrast is enough to influence our perception. If our opinion on gender can be swayed by applying “cosmetics” to a face, we must question our human understanding of gender representations and whether they are simply products of our life-long exposure to stereotypical imagery. The author refers to this as the “Illusion of Sex”.

This bias relates to the selection of the latent “gender” direction: to find the latent direction that changes the perceived gender of a face, StyleGAN-generated images were divided into groups according to their appearance. While this grouping was done by yet another AI, human bias in gender perception might well have influenced the process and leaked through to the image series shown above.
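How a biased grouping can leak into the direction is easy to see in a toy example. The sketch below does not reproduce how the direction used in this article was derived; it swaps in a deliberately simple technique, the difference between the two group means, and uses made-up 8-dimensional latents. The labeller is hypothetical: we pretend coordinate 0 encodes the attribute of interest and coordinate 1 encodes smiling, and we let the labeller be partly swayed by the smile.

```python
import math
import random


def mean_vector(vectors):
    """Coordinate-wise mean of a list of equally long vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def direction_from_groups(group_a, group_b):
    """Unit vector pointing from the mean of group_a to the mean of group_b."""
    mean_a, mean_b = mean_vector(group_a), mean_vector(group_b)
    diff = [b - a for a, b in zip(mean_a, mean_b)]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]


rng = random.Random(0)
DIM = 8  # toy size; real StyleGAN latents are much larger
latents = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(2000)]


def biased_label(z):
    """Hypothetical labeller: mostly coordinate 0, partly the 'smile' coordinate 1."""
    return z[0] + 0.5 * z[1] >= 0.0


group_a = [z for z in latents if not biased_label(z)]
group_b = [z for z in latents if biased_label(z)]

direction = direction_from_groups(group_a, group_b)
print("component on attribute axis:", round(direction[0], 2))
print("component on 'smile' axis:  ", round(direction[1], 2))
```

Because the labeller looks at the smile, the resulting direction has a clearly non-zero smile component: moving along it changes the smile together with the intended attribute, even though nothing in the toy generator ties the two together.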

Conclusion

Moving beyond the gender binary with StyleGANs

While a StyleGAN might not reinforce gender-related bias in and of itself, people still subconsciously harbor gender stereotypes. Gender bias is not limited to images – researchers have found the ubiquity of female voice assistants reason enough to create a new voice assistant that is neither male nor female: GenderLess Voice.

One example of a recent societal shift is the debate over gender: rather than binary, gender may be better represented as a spectrum. The idea is that there is biological gender and social gender. Being included in society as who they are is essential for somebody who identifies with a gender that differs from the one they were born with.

A question we, as a society, must stay wary of is whether the field of AI is at risk of discriminating against those beyond the assigned gender binary. The fact is that in AI research, gender is often represented as binary. Pictures fed into algorithms to train them are labeled as either male or female. Gender recognition systems based on deterministic gender-matching may also cause direct harm by mislabeling members of the LGBTQIA+ community. Currently, additional gender labels have yet to be included in ML research. Rather than representing gender as a binary variable, it could be coded as a spectrum.

Exploring female to male gender representations

We used StyleGANs to explore how AI represents gender differences. Specifically, we used a gender direction in the latent space that researchers had identified as representing male and female gender. We saw that the generated images replicated common gender stereotypes – women smile more, have bigger eyes, longer hair, and wear heavy makeup – but importantly, we could not conclude that the StyleGAN model alone propagates this bias. Firstly, StyleGANs were created primarily to generate photo-realistic face images, not to alter the facial features of existing photos at will. Secondly, since the latent direction we used was created without correcting for biases in the StyleGAN’s training data, we see a correlation between stereotypical features and gender.

Next steps and gender neutrality

We asked ourselves which faces we perceived as gender-neutral among the image sequences we generated. For original images of men, we had to look towards the artificially generated female direction and vice versa. This was a subjective choice. We see it as a logical next step to try to automate the generation of gender-neutral versions of face images, to further explore the possibilities of AI in the context of gender and society. For this, we would first have to classify the gender of the face to be edited and then move towards the opposite gender until the classifier can no longer assign an unambiguous label. Interested readers will be able to follow the continuation of our journey in a second blog article in the near future.
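The automated search could look roughly like the sketch below. Everything in it is an assumption made for illustration: the classifier is a hypothetical stand-in that reads a single latent coordinate, the direction is assumed to point towards the classifier’s “male” label, and a real version would classify the generated image rather than the latent coordinate itself.

```python
import math


def toy_classifier(w):
    """Hypothetical stand-in: probability that latent w is labelled 'male'."""
    score = 1.5 * w[0] - 0.2  # made-up weights for illustration
    return 1.0 / (1.0 + math.exp(-score))


def find_neutral(w, direction, classify, step=0.05, margin=0.05, max_steps=400):
    """Step against the predicted label until the classifier is undecided.

    `direction` is assumed to point towards higher 'male' probability.
    Returns the neutral latent and the classifier probability at that point.
    """
    current = list(w)
    for _ in range(max_steps):
        p = classify(current)
        if abs(p - 0.5) <= margin:  # no unambiguous label any more
            return current, p
        sign = -1.0 if p > 0.5 else 1.0  # move towards the opposite label
        current = [c + sign * step * d for c, d in zip(current, direction)]
    raise RuntimeError("no neutral point found within max_steps")


w_start = [2.0, 0.3, -0.7]   # toy latent that the classifier labels 'male'
direction = [1.0, 0.0, 0.0]  # assumed unit-length gender direction

w_neutral, p_neutral = find_neutral(w_start, direction, toy_classifier)
print("start probability:  ", round(toy_classifier(w_start), 3))
print("neutral probability:", round(p_neutral, 3))
```

The `margin` parameter decides how close to 0.5 the probability must be before a face counts as neutral. That threshold is itself a subjective choice, only now an explicit one.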

If you are interested in our technical implementation for this article, you can find the code here and try it out with your own images.

Resources

Photo Credits

AdobeStock 210526825 – Wayhome Studio

AdobeStock 243124072 – Damir Khabirov

AdobeStock 387860637 – insta_photos

AdobeStock 395297652 – Nattakorn

AdobeStock 480057743 – Chris

AdobeStock 573362719 – Xavier Lorenzo

AdobeStock 575284046 – Jose Calsina