Text classification is one of the most common applications of natural language processing (NLP). It is the task of assigning one or more predefined categories to a text snippet. Depending on the type of problem, the text snippet could be a sentence, a paragraph, or even a whole document. There are many potential real-world applications for text classification; among the most common are sentiment analysis, topic modeling, and the detection of intent, spam, and hate speech.

The standard approach to text classification is training a classifier in a supervised regime. To do so, one needs pairs of text and associated categories (aka labels) from the domain of interest as training data. Then, any classifier (e.g., a neural network) can learn a mapping function from the text to the most likely category. While this approach can work quite well for many settings, its feasibility highly depends on the availability of those hand-labeled pairs of training data.

Though pre-trained language models like BERT can reduce the amount of data needed, they do not make it obsolete altogether. Therefore, for real-world applications, data availability remains the biggest hurdle.

Zero-Shot Learning

Though there are various definitions of zero-shot learning [1], it can broadly be defined as a regime in which a model solves a task it was not explicitly trained on before.

It is important to understand that a “task” can be defined in both a broader and a narrower sense: For example, the authors of GPT-2 showed that a model trained on language generation can be applied to entirely new downstream tasks like machine translation [2]. At the same time, a narrower definition of task would be to recognize previously unseen categories in images as shown in the OpenAI CLIP paper [3].

But what all these approaches have in common is the idea of extrapolation of learned concepts beyond the training regime. A powerful concept, because it disentangles the solvability of a task from the availability of (labeled) training data.

Zero-Shot Learning for Text Classification

Solving text classification tasks with zero-shot learning can serve as a good example of how to apply the extrapolation of learned concepts beyond the training regime. One way to do this is using natural language inference (NLI) as proposed by Yin et al. (2019) [4]. There are other approaches as well, like calculating distances between text embeddings or formulating the problem as a cloze task.

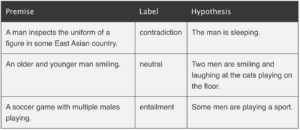

In NLI the task is to determine whether a hypothesis is true (entailment), false (contradiction), or undetermined (neutral) given a premise [5]. A typical NLI dataset consists of sentence pairs with associated labels in the following form:

Examples from http://nlpprogress.com/english/natural_language_inference.html

Yin et al. (2019) proposed to use large language models like BERT trained on NLI datasets and exploit their language understanding capabilities for zero-shot text classification. This can be done by taking the text of interest as the premise and formulating one hypothesis for each potential category by using a so-called hypothesis template. Then, we let the NLI model predict whether the premise entails the hypothesis. Finally, the predicted probability of entailment can be interpreted as the probability of the label.
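The procedure described above can be sketched in a few lines of plain Python. The snippet only illustrates the bookkeeping around the NLI model: the entailment logits are made-up numbers rather than real model output, and the helper names build_hypotheses and label_probabilities are hypothetical. It assumes the single-label setting, in which the entailment scores of all candidate labels are normalized with a softmax.

```python
import math


def build_hypotheses(labels, template="This example is {}."):
    """Fill the hypothesis template once per candidate label."""
    return [template.format(label) for label in labels]


def label_probabilities(entailment_logits):
    """Softmax over the entailment logits of all candidate labels."""
    shift = max(entailment_logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in entailment_logits]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["sports", "politics", "science"]
premise = "The home team won the final after a penalty shoot-out."
hypotheses = build_hypotheses(labels)

# Hypothetical entailment logits, one per (premise, hypothesis) pair.
# A real NLI model would produce these values.
entailment_logits = [3.2, -1.0, -0.5]

probs = label_probabilities(entailment_logits)
for label, hypothesis, prob in zip(labels, hypotheses, probs):
    print(f"{hypothesis!r}: {prob:.2%}")
```

The label whose hypothesis receives the highest entailment score ends up with the highest probability, which is the interpretation used in the rest of this post.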

Zero-Shot Text Classification with Hugging Face 🤗

Let’s explore the above-formulated idea in more detail using the excellent Hugging Face implementation for zero-shot text classification.

We are interested in classifying the sentence below into pre-defined topics:

topics = ['Web', 'Panorama', 'International', 'Wirtschaft', 'Sport', 'Inland', 'Etat', 'Wissenschaft', 'Kultur']

test_txt = 'Eintracht Frankfurt gewinnt die Europa League nach 6:5-Erfolg im Elfmeterschießen gegen die Glasgow Rangers'

Thanks to the 🤗 pipeline abstraction, we do not need to define the prediction task ourselves. We just need to instantiate a pipeline and define the task as zero-shot-classification. The pipeline will take care of formulating the premise and hypothesis as well as dealing with the logits and probabilities from the model.

As written above, we need a language model that was pre-trained on an NLI task. The default model for zero-shot text classification in 🤗 is bart-large-mnli. BART is a transformer encoder-decoder for sequence-to-sequence modeling with a bidirectional (BERT-like) encoder and an autoregressive (GPT-like) decoder [6]. The mnli suffix means that BART was then further fine-tuned on the MultiNLI dataset [7].

But since we are using German sentences and BART is English-only, we need to replace the default model with a custom one. Thanks to the 🤗 model hub, finding a suitable candidate is quite easy. In our case, mDeBERTa-v3-base-xnli-multilingual-nli-2mil7 is such a candidate. Let’s briefly decode the name for a better understanding: it is a multilingual version of DeBERTa-v3-base (which is itself an improved version of BERT/RoBERTa [8]) that was then fine-tuned on two cross-lingual NLI datasets (XNLI [9] and multilingual-NLI-26lang [10]).

With the correct task and the correct model, we can now instantiate the pipeline:

from transformers import pipeline

model = 'MoritzLaurer/mDeBERTa-v3-base-xnli-multilingual-nli-2mil7'

pipe = pipeline(task='zero-shot-classification', model=model, tokenizer=model)

Next, we call the pipeline to predict the most likely category of our text given the candidates. But as a final step, we need to replace the default hypothesis template as well. This is necessary since the default is again in English. We, therefore, define the template as 'Das Thema ist {}'. Note that {} is a placeholder for the previously defined topic candidates. You can define any template you like as long as it contains a placeholder for the candidates:

template_de = 'Das Thema ist {}'

prediction = pipe(test_txt, topics, hypothesis_template=template_de)

Finally, we can assess the prediction from the pipeline. The code below will output the three most likely topics together with their predicted probabilities:

print(f'Zero-shot prediction for: \n {prediction["sequence"]}')

top_3 = zip(prediction['labels'][0:3], prediction['scores'][0:3])

for label, score in top_3:

    print(f'{label} - {score:.2%}')

Zero-shot prediction for:

Eintracht Frankfurt gewinnt die Europa League nach 6:5-Erfolg im Elfmeterschießen gegen die Glasgow Rangers

Sport - 77.41%

International - 15.69%

Inland - 5.29%

As one can see, the zero-shot model produces a reasonable result with “Sport” being the most likely topic followed by “International” and “Inland”.

Below are a few more examples from other categories. Like before, the results are overall quite reasonable. Note how for the second text the model predicts an unexpectedly low probability of “Kultur”.

further_examples = ['Verbraucher halten sich wegen steigender Zinsen und Inflation beim Immobilienkauf zurück',

'„Die bitteren Tränen der Petra von Kant“ von 1972 geschlechtsumgewandelt und neu verfilmt',

'Eine 541 Millionen Jahre alte fossile Alge weist erstaunliche Ähnlichkeit zu noch heute existierenden Vertretern auf']

for txt in further_examples:

    prediction = pipe(txt, topics, hypothesis_template=template_de)

    print(f'Zero-shot prediction for: \n {prediction["sequence"]}')

    top_3 = zip(prediction['labels'][0:3], prediction['scores'][0:3])

    for label, score in top_3:

        print(f'{label} - {score:.2%}')

Zero-shot prediction for:

Verbraucher halten sich wegen steigender Zinsen und Inflation beim Immobilienkauf zurück

Wirtschaft - 96.11%

Inland - 1.69%

Panorama - 0.70%

Zero-shot prediction for:

„Die bitteren Tränen der Petra von Kant“ von 1972 geschlechtsumgewandelt und neu verfilmt

International - 50.95%

Inland - 16.40%

Kultur - 7.76%

Zero-shot prediction for:

Eine 541 Millionen Jahre alte fossile Alge weist erstaunliche Ähnlichkeit zu noch heute existierenden Vertretern auf

Wissenschaft - 67.52%

Web - 8.14%

Inland - 6.91%

The entire code can be found on GitHub. Besides the examples above, you will also find applications of zero-shot text classification to two labeled datasets there, including an evaluation of the accuracy. In addition, I added some prompt-tuning by playing around with the hypothesis template.

Concluding Thoughts

Zero-shot text classification is a suitable approach when training data is limited (or even non-existent), and it also serves as an easy-to-implement benchmark for more sophisticated methods. While explicit approaches, like fine-tuning large pre-trained models, certainly still outperform implicit approaches, like zero-shot learning, the universal applicability of the latter makes them very appealing.

In addition, we should expect zero-shot learning, in general, to become more important over the next few years. This is because the way we will use models to solve tasks will evolve with the increasing importance of large pre-trained models. Therefore, I advocate that already today zero-shot techniques should be considered part of every modern data scientist’s toolbox.

Sources:

1 https://joeddav.github.io/blog/2020/05/29/ZSL.html

2 https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf

3 https://arxiv.org/pdf/2103.00020.pdf

4 https://arxiv.org/pdf/1909.00161.pdf

5 http://nlpprogress.com/english/natural_language_inference.html

6 https://arxiv.org/pdf/1910.13461.pdf

7 https://huggingface.co/datasets/multi_nli

8 https://arxiv.org/pdf/2006.03654.pdf

9 https://huggingface.co/datasets/xnli

10 https://huggingface.co/datasets/MoritzLaurer/multilingual-NLI-26lang-2mil7

We at statworx work a lot with R and often use the same little helper functions within our projects. These functions ease our daily work life by reducing repetitive code parts or by creating overviews of our projects. To share these functions within our teams and with others as well, I started to collect them and created an R package out of them called helfRlein. Besides sharing, I also wanted to have some use cases to improve my debugging and optimization skills. With time the package grew, and more and more functions came together. Last time I presented each function as part of an advent calendar. For our new website launch, I combined all of them into this one post and will present each current function from the package.

Most functions were developed when there was a problem, and one needed a simple solution. For example, the text shown was too long and needed to be shortened (see evenstrings). Other functions exist to reduce repetitive tasks – like reading in multiple files of the same type (see read_files). Therefore, these functions might be useful to you, too!

You can check out our

1. char_replace

This little helper replaces non-standard characters (such as the German umlaut “ä”) with their standard equivalents (in this case, “ae”). It is also possible to force all characters to lower case, trim whitespaces, or replace whitespaces and dashes with underscores.

Let’s look at a small example with different settings:

x <- " Élizàldë-González Strasse"

char_replace(x, to_lower = TRUE)

[1] "elizalde-gonzalez strasse"

char_replace(x, to_lower = TRUE, to_underscore = TRUE)

[1] "elizalde_gonzalez_strasse"

char_replace(x, to_lower = FALSE, rm_space = TRUE, rm_dash = TRUE)

[1] "ElizaldeGonzalezStrasse"

2. checkdir

This little helper checks a given folder path for existence and creates it if needed.

checkdir(path = "testfolder/subfolder")

Internally, it relies on file.exists() and dir.create().

3. clean_gc

This little helper frees your memory from unused objects. Well, basically, it just calls gc() a few times. I used this some time ago for a project where I worked with huge data files. Even though we were lucky enough to have a big server with 500GB RAM, we soon reached its limits. Since we typically parallelize several processes, we needed to preserve every bit and byte of RAM we could get. So, instead of having many lines like this one:

gc();gc();gc();gc()

I wrote clean_gc() for convenience. Internally, gc() is simply called several times.

Some further thoughts

gc() and its usefulness. If you want to learn more about this, I suggest you check out the

4. count_na

This little helper counts missing values within a vector.

x <- c(NA, NA, 1, NaN, 0)

count_na(x)

3

Internally, sum(is.na(x)) counts the NA values. If you want the mean instead of the sum, you can set prop = TRUE.

5. evenstrings

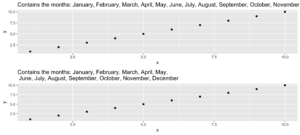

This little helper splits a given string into smaller parts with a fixed length. But why? I needed this function while creating a plot with a long title. The text was too long for one line, and I wanted to separate it nicely instead of just cutting it or letting it run over the edges.

Given a long string like…

long_title <- c("Contains the months: January, February, March, April, May, June, July, August, September, October, November, December")

…we want to split it at split = "," with a maximum length of char = 60:

short_title <- evenstrings(long_title, split = ",", char = 60)

The function has two possible output formats, which can be chosen by setting newlines = TRUE or FALSE:

- one string with line separators \n

- a vector with each sub-part.

Another use case could be a message that is printed at the console with cat():

cat(long_title)

Contains the months: January, February, March, April, May, June, July, August, September, October, November, December

cat(short_title)

Contains the months: January, February, March, April, May,

June, July, August, September, October, November, December

Code for plot example

p1 <- ggplot(data.frame(x = 1:10, y = 1:10),

aes(x = x, y = y)) +

geom_point() +

ggtitle(long_title)

p2 <- ggplot(data.frame(x = 1:10, y = 1:10),

aes(x = x, y = y)) +

geom_point() +

ggtitle(short_title)

multiplot(p1, p2)

6. get_files

This little helper does the same thing as the “Find in files” search within RStudio. It returns a vector with all files in a given folder that contain the search pattern. In your daily workflow, you would usually use the shortcut key SHIFT+CTRL+F. With get_files() you can use this functionality within your scripts.

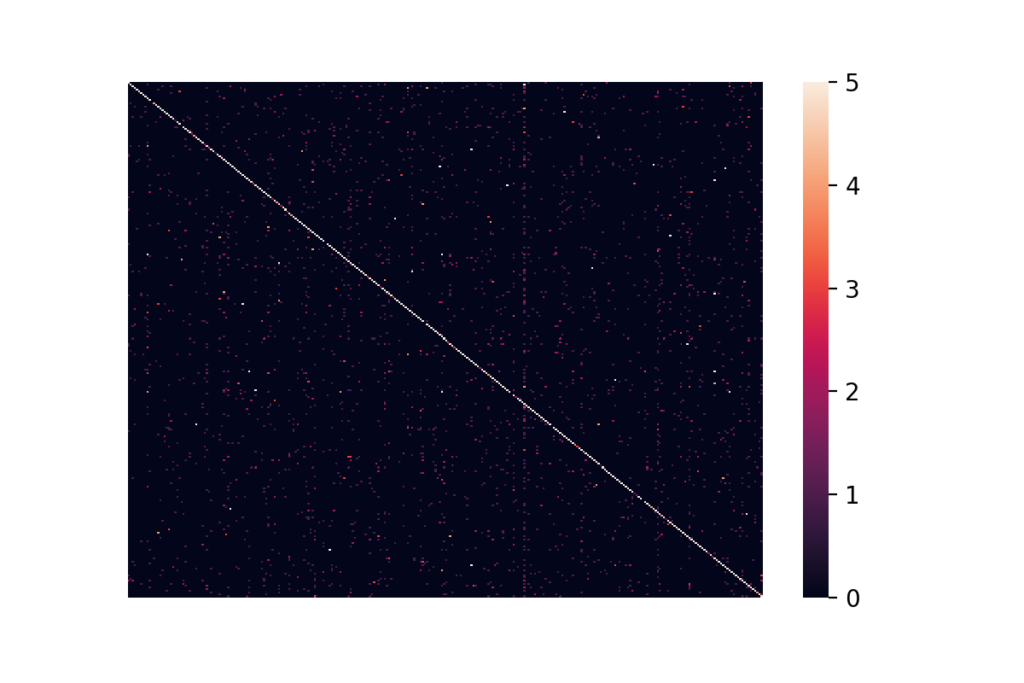

7. get_network

This little helper aims to visualize the connections between R functions within a project as a flowchart. For this, the input is a directory path to the functions or a list with the functions, and the outputs are an adjacency matrix and an igraph object. As an example:

net <- get_network(dir = "flowchart/R_network_functions/", simplify = FALSE)

g1 <- net$igraph

Input

There are five parameters to interact with the function:

- A path dir, which shall be searched.

- A character vector variations with the function’s definition string; the default is c(" <- function", "<- function", "<-function").

- A string pattern with the file suffix; the default is "\\.R$".

- A boolean simplify that removes functions with no connections from the plot.

- A named list all_scripts, which is an alternative to dir. This is mainly just used for testing purposes.

For normal usage, it should be enough to provide a path to the project folder.

Output

The given plot shows the connections of each function (arrows) and also the relative size of the function’s code (size of the points). As mentioned above, the output consists of an adjacency matrix and an igraph object. The matrix contains the number of calls for each function. The igraph object has the following properties:

- The names of the functions are used as labels.

- The number of lines of each function (without comments and empty ones) is saved as the size.

- The name of the first folder in the directory is saved as the folder.

- A color corresponding to the folder.

With these properties, you can improve the network plot, for example, like this:

library(igraph)

# create plots ------------------------------------------------------------

l <- layout_with_fr(g1)

colrs <- rainbow(length(unique(V(g1)$color)))

plot(g1,

edge.arrow.size = .1,

edge.width = 5*E(g1)$weight/max(E(g1)$weight),

vertex.shape = "none",

vertex.label.color = colrs[V(g1)$color],

vertex.label.color = "black",

vertex.size = 20,

vertex.color = colrs[V(g1)$color],

edge.color = "steelblue1",

layout = l)

legend(x = 0,

unique(V(g1)$folder), pch = 21,

pt.bg = colrs[unique(V(g1)$color)],

pt.cex = 2, cex = .8, bty = "n", ncol = 1)

8. get_sequence

This little helper returns indices of recurring patterns. It works with numbers as well as with characters. All it needs is a vector with the data, a pattern to look for, and a minimum number of occurrences.

Let’s create some time series data with the following code.

library(data.table)

# random seed

set.seed(20181221)

# number of observations

n <- 100

# simulationg the data

ts_data <- data.table(DAY = 1:n, CHANGE = sample(c(-1, 0, 1), n, replace = TRUE))

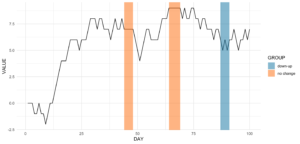

ts_data[, VALUE := cumsum(CHANGE)]This is nothing more than a random walk since we sample between going down (-1), going up (1), or staying at the same level (0). Our time series data looks like this:

Assume we want to know the date ranges when there was no change for at least four days in a row.

ts_data[, get_sequence(x = CHANGE, pattern = 0, minsize = 4)]

     min max

[1,]  45  48

[2,]  65  69

We can also answer the question of whether the pattern “down-up-down-up” is repeating anywhere:

ts_data[, get_sequence(x = CHANGE, pattern = c(-1,1), minsize = 2)]

     min max

[1,]  88  91

These ranges can be highlighted in the plot with geom_rect.

Code for the plot

rect <- data.table(

rbind(ts_data[, get_sequence(x = CHANGE, pattern = c(0), minsize = 4)],

ts_data[, get_sequence(x = CHANGE, pattern = c(-1,1), minsize = 2)]),

GROUP = c("no change","no change","down-up"))

ggplot(ts_data, aes(x = DAY, y = VALUE)) +

geom_line() +

geom_rect(data = rect,

inherit.aes = FALSE,

aes(xmin = min - 1,

xmax = max,

ymin = -Inf,

ymax = Inf,

group = GROUP,

fill = GROUP),

color = "transparent",

alpha = 0.5) +

scale_fill_manual(values = statworx_palette(number = 2, basecolors = c(2,5))) +

theme_minimal()

9. intersect2

This little helper returns the intersection of multiple vectors or lists. I found this function, thought it was quite useful, and adjusted it a bit.

intersect2(list(c(1:3), c(1:4)), list(c(1:2),c(1:3)), c(1:2))

[1] 1 2

Internally, the problem of finding the intersection is solved recursively if an element is a list, and then stepwise with the next element.

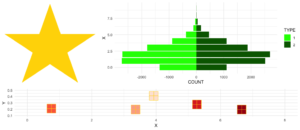

10. multiplot

This little helper combines multiple ggplots into one plot. This is a function taken from .

An advantage over facets is that you don’t need all data for all plots within one object. Also, you can freely create each single plot, which can sometimes also be a disadvantage.

With the layout parameter you can arrange multiple plots with different sizes. Let’s say you have three plots and want to arrange them like this:

1 2 2

1 2 2

3 3 3

With multiplot, this layout can be specified as follows:

multiplot(plotlist = list(p1, p2, p3),

layout = matrix(c(1,2,2,1,2,2,3,3,3), nrow = 3, byrow = TRUE))

Code for plot example

# star coordinates

c1 = cos((2*pi)/5)

c2 = cos(pi/5)

s1 = sin((2*pi)/5)

s2 = sin((4*pi)/5)

data_star <- data.table(X = c(0, -s2, s1, -s1, s2),

Y = c(1, -c2, c1, c1, -c2))

p1 <- ggplot(data_star, aes(x = X, y = Y)) +

geom_polygon(fill = "gold") +

theme_void()

# tree

set.seed(24122018)

n <- 10000

lambda <- 2

data_tree <- data.table(X = c(rpois(n, lambda), rpois(n, 1.1*lambda)),

TYPE = rep(c("1", "2"), each = n))

data_tree <- data_tree[, list(COUNT = .N), by = c("TYPE", "X")]

data_tree[TYPE == "1", COUNT := -COUNT]

p2 <- ggplot(data_tree, aes(x = X, y = COUNT, fill = TYPE)) +

geom_bar(stat = "identity") +

scale_fill_manual(values = c("green", "darkgreen")) +

coord_flip() +

theme_minimal()

# gifts

data_gifts <- data.table(X = runif(5, min = 0, max = 10),

Y = runif(5, max = 0.5),

Z = sample(letters[1:5], 5, replace = FALSE))

p3 <- ggplot(data_gifts, aes(x = X, y = Y)) +

geom_point(aes(color = Z), pch = 15, size = 10) +

scale_color_brewer(palette = "Reds") +

geom_point(pch = 12, size = 10, color = "gold") +

xlim(0,8) +

ylim(0.1,0.5) +

theme_minimal() +

theme(legend.position="none")

11. na_omitlist

This little helper removes missing values from a list.

y <- list(NA, c(1, NA), list(c(5:6, NA), NA, "A"))

There are two ways to remove the missing values, either only on the first level of the list or within each sub level.

na_omitlist(y, recursive = FALSE)

[[1]]

[1] 1 NA

[[2]]

[[2]][[1]]

[1] 5 6 NA

[[2]][[2]]

[1] NA

[[2]][[3]]

[1] "A"

na_omitlist(y, recursive = TRUE)

[[1]]

[1] 1

[[2]]

[[2]][[1]]

[1] 5 6

[[2]][[2]]

[1] "A"

12. %nin%

This little helper is the negation of the %in% operator:

all.equal( c(1,2,3,4) %nin% c(1,2,5),

!c(1,2,3,4) %in% c(1,2,5))

[1] TRUE

13. object_size_in_env

This little helper shows a table with the size of each object in the given environment.

If you are in a situation where you have coded a lot and your environment is now quite messy, object_size_in_env helps you to find the big fish with respect to memory usage. Personally, I ran into this problem a few times when I looped over multiple executions of my models. At some point, the sessions became quite large in memory and I did not know why! With the help of object_size_in_env and some debugging, I could locate the object that caused this problem and adjusted my code accordingly.

First, let us create an environment with some variables.

# building an environment

this_env <- new.env()

assign("Var1", 3, envir = this_env)

assign("Var2", 1:1000, envir = this_env)

assign("Var3", rep("test", 1000), envir = this_env)

Internally, format(object.size()) is used. With the unit parameter, the output format can be changed (e.g., "B", "MB", or "GB").

# checking the size

object_size_in_env(env = this_env, unit = "B")

   OBJECT SIZE UNIT

1:   Var3 8104    B

2:   Var2 4048    B

3:   Var1   56    B

14. print_fs

This little helper returns the folder structure of a given path. With this, one can, for example, add a nice overview to the documentation of a project or within a git. For the sake of automation, this function could run and change parts within a log or news file after a major change.

If we take a look at the same example we used for the get_network function, we get the following:

print_fs("~/flowchart/", depth = 4)

1 flowchart

2 ¦--create_network.R

3 ¦--getnetwork.R

4 ¦--plots

5 ¦ ¦--example-network-helfRlein.png

6 ¦ °--improved-network.png

7 ¦--R_network_functions

8 ¦ ¦--dataprep

9 ¦ ¦ °--foo_01.R

10 ¦ ¦--method

11 ¦ ¦ °--foo_02.R

12 ¦ ¦--script_01.R

13 ¦ °--script_02.R

14 °--README.md

With depth we can adjust how deep we want to traverse through our folders.

15. read_files

This little helper reads in multiple files of the same type and combines them into a data.table. Which file reading function is used can be chosen with the FUN argument.

If you have a list of files that all need to be loaded with the same function (e.g. read.csv), you can now use this instead of lapply and rbindlist:

read_files(files, FUN = readRDS)

read_files(files, FUN = readLines)

read_files(files, FUN = read.csv, sep = ";")

Internally, it just uses lapply and rbindlist, but you don’t have to type it all the time. read_files combines the single files by their column names and returns one data.table. Why data.table? Because I like it. But, let’s not go down the rabbit hole of data.table vs dplyr.

16. save_rds_archive

This little helper is a wrapper around base R saveRDS() and checks if the file you attempt to save already exists. If it does, the existing file is renamed / archived (with a time stamp), and the “updated” file will be saved under the specified name. This means that existing code which depends on the file name remaining constant (e.g., readRDS() calls in other scripts) will continue to work while an archived copy of the – otherwise overwritten – file will be kept.

17. sci_palette

This little helper returns a set of colors which we often use at statworx. So, if – like me – you cannot remember each hex color code you need, this might help. Of course these are our colours, but you could rewrite it with your own palette. But the main benefit is the plotting method – so you can see the color instead of only reading the hex code.

To see which hex code corresponds to which colour and for what purpose to use it:

sci_palette(scheme = "new")

Tech Blue Black White Light Grey Accent 1 Accent 2 Accent 3

"#0000FF" "#000000" "#FFFFFF" "#EBF0F2" "#283440" "#6C7D8C" "#B6BDCC"

Highlight 1 Highlight 2 Highlight 3

"#00C800" "#FFFF00" "#FE0D6C"

attr(,"class")

[1] "sci"

To see the colors instead of only reading the hex codes, use plot():

plot(sci_palette(scheme = "new"))

18. statusbar

This little helper prints a progress bar into the console for loops.

There are two necessary parameters to feed this function:

- run is either the iterator or its number

- max.run is either all possible iterators in the order they are processed or the maximum number of iterations.

So for example it could be run = 3 and max.run = 16 or run = "a" and max.run = letters[1:16].

Also, there are two optional parameters:

- percent.max influences the width of the progress bar

- info is an additional character, which is printed at the end of the line. By default it is run.

A little disadvantage of this function is that it does not work with parallel processes. If you want to have a progress bar when using apply functions check out .

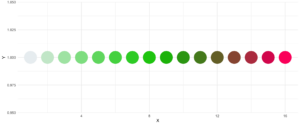

19. statworx_palette

This little helper is an addition to sci_palette. We picked colors 1, 2, 3, 5 and 10 to create a flexible color palette. If you need 100 different colors – say no more!

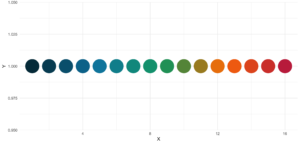

In contrast to sci_palette() the return value is a character vector. For example if you want 16 colors:

statworx_palette(16, scheme = "old")

[1] "#013848" "#004C63" "#00617E" "#00759A" "#0087AB" "#008F9C" "#00978E" "#009F7F"

[9] "#219E68" "#659448" "#A98B28" "#ED8208" "#F36F0F" "#E45A23" "#D54437" "#C62F4B"

If we now plot those colors, we get this nice rainbow-like gradient.

library(ggplot2)

ggplot(plot_data, aes(x = X, y = Y)) +

geom_point(pch = 16, size = 15, color = statworx_palette(16, scheme = "old")) +

theme_minimal()

There is also a reorder parameter, which samples the color’s order so that neighbours might be a bit more distinguishable. Also, if you want to change the used colors, you can do so with basecolors:

ggplot(plot_data, aes(x = X, y = Y)) +

geom_point(pch = 16, size = 15,

color = statworx_palette(16, basecolors = c(4,8,10), scheme = "new")) +

theme_minimal()

20. strsplit

This little helper adds functionality to the base R function strsplit – hence the same name! It is now possible to split before, after or between a given delimiter. In the case of between you need to specify two delimiters.

An earlier version of this function can be found , where I describe the used regular expressions, if you are interested.

Here is a little example on how to use the new strsplit.

text <- c("This sentence should be split between should and be.")

strsplit(x = text, split = " ")

strsplit(x = text, split = c("should", " be"), type = "between")

strsplit(x = text, split = "be", type = "before")

[[1]]

[1] "This" "sentence" "should" "be" "split" "between" "should" "and"

[9] "be."

[[1]]

[1] "This sentence should" " be split between should and be."

[[1]]

[1] "This sentence should " "be split " "between should and "

[4] "be."

21. to_na

This little helper is just a convenience function. Sometimes during your data preparation, you have a vector with infinite values like Inf or -Inf or even NaN values. Those kinds of values can (they do not have to!) mess up your evaluation and models. But most functions do have a tendency to handle missing values. So, this little helper removes such values and replaces them with NA.

A small example to give you the idea:

test <- list(a = c("a", "b", NA),

b = c(NaN, 1,2, -Inf),

c = c(TRUE, FALSE, NaN, Inf))

lapply(test, to_na)

$a

[1] "a" "b" NA

$b

[1] NA 1 2 NA

$c

[1] TRUE FALSE NA

Since there are different types of NA depending on the other values within a vector, you might want to check the format if you use to_na.

test <- list(NA, c(NA, "a"), c(NA, 2.3), c(NA, 1L))

str(test)

List of 4

$ : logi NA

$ : chr [1:2] NA "a"

$ : num [1:2] NA 2.3

$ : int [1:2] NA 1

22. trim

This little helper removes leading and trailing whitespaces from a string. Base R has since introduced trimws, which does the exact same thing. This just shows, it was not a bad idea to write such a function. 😉

x <- c(" Hello world!", " Hello world! ", "Hello world! ")

trim(x, lead = TRUE, trail = TRUE)

[1] "Hello world!" "Hello world!" "Hello world!"

The lead and trail parameters indicate whether only leading, only trailing, or both whitespaces should be removed.

Conclusion

I hope that the helfRlein package makes your work as easy as it is for us here at statworx. If you have any questions or input about the package, please send us an email to: blog@statworx.com

In the field of Data Science – as the name suggests – the topic of data, from data cleaning to feature engineering, is one of the cornerstones. Having and evaluating data is one thing, but how do you actually get data for new problems?

If you are lucky, the data you need is already available, either as a complete dataset to download or via an API. Often, however, you have to gather information from websites yourself – this is called web scraping. Depending on how often you want to scrape data, it is advantageous to automate this step.

This post will be about exactly this automation. Using web scraping and GitHub Actions as an example, I will show how you can create your own data sets over a more extended period. The focus will be on the experience I have gathered over the last few months.

The code I used and the data I collected can be found in this GitHub repository.

Search for data – the initial situation

During my research for the blog post about gasoline prices, I also came across data on the utilization of parking garages in Frankfurt am Main. Obtaining this data laid the foundation for this post. After some thought and additional research, other thematically appropriate data sources came to mind:

- Road utilization

- S-Bahn and subway delays

- Events nearby

- Weather data

However, it quickly became apparent that I could not get all this data, as it is not freely available or may not be stored. Since I planned to store the collected data on GitHub and make it available, this was a crucial criterion for deciding which data could be used. For these reasons, railway data fell out completely. For road usage, I only found data for Cologne, and I wanted to avoid using the Google API as that definitely brings its own challenges. So, I was left with event and weather data.

For the weather data of the German Weather Service, the rdwd package can be used. Since this data is already available historically, it is irrelevant for this blog post. GitHub Actions have proven to be very useful to get the remaining event and parking data, even if they are not entirely trivial to use. Especially the fact that they can be used free of charge makes them a recommendable tool for such projects.

Scraping the data

Since this post will not deal with the details of web scraping, I refer you here to the post by my colleague David.

The parking data is available here in XML format and is updated every 5 minutes. Once you understand the structure of the XML, it’s a simple matter of accessing the right index, and you have the data you want. In the function get_parking_data(), I have summarized everything I need. It creates a record for the area and a record for the individual parking garages.
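To illustrate the idea of reading values out of such an XML document, here is a minimal Python sketch. It is not the get_parking_data() function from this post, which is not shown here. The XML snippet is a simplified, hypothetical structure: the element names parkingAreas and area are assumptions, while the field names and sample values are borrowed from the data extracts in this post. The real feed is nested differently.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical XML; the real feed has a different, deeper structure.
SAMPLE_XML = """
<parkingAreas>
  <area id="1[Anlagenring]">
    <parkingAreaOccupancy>0.08401977</parkingAreaOccupancy>
    <parkingAreaTotalNumberOfVacantParkingSpaces>556</parkingAreaTotalNumberOfVacantParkingSpaces>
  </area>
  <area id="2[Zeil]">
    <parkingAreaOccupancy>0.21266666</parkingAreaOccupancy>
    <parkingAreaTotalNumberOfVacantParkingSpaces>1181</parkingAreaTotalNumberOfVacantParkingSpaces>
  </area>
</parkingAreas>
"""


def parse_areas(xml_text):
    """Return one record (dict) per <area> element."""
    root = ET.fromstring(xml_text)
    records = []
    for area in root.findall("area"):
        records.append({
            "id": area.get("id"),
            "occupancy": float(area.findtext("parkingAreaOccupancy")),
            "vacant": int(area.findtext("parkingAreaTotalNumberOfVacantParkingSpaces")),
        })
    return records


records = parse_areas(SAMPLE_XML)
for record in records:
    print(record)
```

Each record could then be appended as one row to a .csv file, with a request timestamp added at scraping time.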

Example data extract area

parkingAreaOccupancy;parkingAreaStatusTime;parkingAreaTotalNumberOfVacantParkingSpaces;totalParkingCapacityLongTermOverride;totalParkingCapacityShortTermOverride;id;TIME

0.08401977;2021-12-01T01:07:00Z;556;150;607;1[Anlagenring];2021-12-01T01:07:02.720Z

0.31417114;2021-12-01T01:07:00Z;513;0;748;4[Bahnhofsviertel];2021-12-01T01:07:02.720Z

0.351417;2021-12-01T01:07:00Z;801;0;1235;5[Dom / Römer];2021-12-01T01:07:02.720Z

0.21266666;2021-12-01T01:07:00Z;1181;70;1500;2[Zeil];2021-12-01T01:07:02.720Z

Example data extract facility

parkingFacilityOccupancy;parkingFacilityStatus;parkingFacilityStatusTime;totalNumberOfOccupiedParkingSpaces;totalNumberOfVacantParkingSpaces;totalParkingCapacityLongTermOverride;totalParkingCapacityOverride;totalParkingCapacityShortTermOverride;id;TIME

0.02;open;2021-12-01T01:02:00Z;4;196;150;350;200;24276[Turmcenter];2021-12-01T01:07:02.720Z

0.11547912;open;2021-12-01T01:02:00Z;47;360;0;407;407;18944[Alte Oper];2021-12-01T01:07:02.720Z

0.0027472528;open;2021-12-01T01:02:00Z;1;363;0;364;364;24281[Hauptbahnhof Süd];2021-12-01T01:07:02.720Z

0.609375;open;2021-12-01T01:02:00Z;234;150;0;384;384;105479[Baseler Platz];2021-12-01T01:07:02.720Z

For the event data, I scrape the page stadtleben.de. Since its HTML is quite well structured, I can access the tabular event overview via the tag “kalenderListe”. The result is created by the function get_event_data().

Example data extract event

eventtitle;views;place;address;eventday;eventdate;request

Magical Sing Along - Das lustigste Mitsing-Event;12576;Bürgerhaus;64546 Mörfelden-Walldorf, Westendstraße 60;Freitag;2022-03-04;2022-03-04T02:24:14.234833Z

Velvet-Bar-Night;1460;Velvet Club;60311 Frankfurt, Weißfrauenstraße 12-16;Freitag;2022-03-04;2022-03-04T02:24:14.234833Z

Basta A-cappella-Band;465;Zeltpalast am Deutsche Bank Park;60528 Frankfurt am Main, Mörfelder Landstraße 362;Freitag;2022-03-04;2022-03-04T02:24:14.234833Z

BeThrifty Vintage Kilo Sale | Frankfurt | 04. & 05. …;1302;Batschkapp;60388 Frankfurt am Main, Gwinnerstraße 5;Freitag;2022-03-04;2022-03-04T02:24:14.234833Z

Automation of workflows – GitHub Actions

The basic framework is in place: I have functions that write the parking and event data to a .csv file when executed. Since I want to query the parking data every five minutes and the event data three times a day to be on the safe side, GitHub Actions comes into play.

With this GitHub feature, workflows can be scheduled and executed, in addition to being triggered by events such as merges or commits. For this purpose, a .yml file is created in the folder /.github/workflows.

The main components of my workflow are:

- The schedule – Every ten minutes, the functions should be executed.

- The OS – Since I develop locally on a Mac, I use macOS-latest here.

- Environment variables – These contain my GitHub token and the path for the package management tool renv.

- The individual steps in the workflow itself.

The workflow goes through the following steps:

- Setup R

- Load packages with renv

- Run script to scrape data

- Run script to update the README

- Pushing the new data back into git

Each of these steps is very small and clear in itself; however, as is often the case, the devil is in the details.

Limitation and challenges

Over the last few months, I’ve been tweaking and optimizing my workflow to deal with the bugs and issues. In the following, you will find an overview of my condensed experiences with GitHub Actions from the last months.

Schedule problems

If you want to perform time-critical actions, you should use other services. GitHub Actions does not guarantee that jobs will run exactly on schedule (or, in some cases, that they will run at all).

| Time span in minutes | <= 5 | <= 10 | <= 20 | <= 60 | > 60 |
| --- | --- | --- | --- | --- | --- |
| Number of queries | 1720 | 2049 | 5509 | 3023 | 194 |

You can see that the planned five-minute intervals were not always adhered to. I should plan a larger margin here in the future.
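A table like the one above can be derived from the request timestamps of consecutive runs. The following Python sketch shows one way to do this; the helper names and the sample timestamps are made up for illustration, and only the bin edges are taken from the table.

```python
from collections import Counter
from datetime import datetime

# Upper bin edges in minutes, matching the columns of the table above
BINS = [(5, "<= 5"), (10, "<= 10"), (20, "<= 20"), (60, "<= 60")]


def bin_label(minutes):
    """Map a gap in minutes to the label of the first bin it fits into."""
    for upper, label in BINS:
        if minutes <= upper:
            return label
    return "> 60"


def interval_histogram(timestamps):
    """Count the gaps between consecutive timestamps per bin."""
    times = sorted(datetime.fromisoformat(t) for t in timestamps)
    gaps = [(b - a).total_seconds() / 60 for a, b in zip(times, times[1:])]
    return Counter(bin_label(gap) for gap in gaps)


# Made-up request times, not taken from the collected data
sample = [
    "2021-12-01T01:07:00",
    "2021-12-01T01:12:00",
    "2021-12-01T01:30:00",
    "2021-12-01T03:00:00",
]
print(interval_histogram(sample))
```

Running such a check regularly also helps to notice when a scheduled workflow has silently stopped.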

Merge conflicts

In the beginning, I had two workflows, one for the parking data and one for the events. If they overlapped in time, there were merge conflicts because both processes updated the README with a timestamp. Over time, I switched to a single workflow that includes error handling.

Merge conflicts also occurred in the .csv data when pushing if one run took longer and the next one had already started. Long runs were often caused by the R setup and the loading of the packages. Consequently, I extended the schedule interval from five to ten minutes.

Format adjustments

There were a few situations where the paths or structure of the scraped data changed, so I had to adjust my functions. Here, the option to receive an email when a run fails was very helpful.

Lack of testing capabilities

There is no way to test a workflow script other than to run it. So, after a typo in the evening, one can wake up to a flood of emails with spawned runs in the morning. Still, that shouldn’t stop you from doing a local test run.

No data update

Since the end of December, the parking data has not been updated or made available. This shows that even if you have an automatic process, you should still continue to monitor it. I only noticed this later, which meant that my queries at the end of December always went nowhere.

Conclusion

Despite all these complications, I still consider the whole thing a massive success. Over the last few months, I’ve been studying the topic repeatedly and have learned the tricks described above, which will also help me solve other problems in the future. I hope that all readers of this blog post could also take away some valuable tips and thus learn from my mistakes.

Since I have now collected a good half-year of data, I can deal with the evaluation. But this will be the subject of another blog post.

Introduction

The more complex any given data science project in Python gets, the harder it usually becomes to keep track of how all modules interact with each other. Undoubtedly, when working in a team on a bigger project, as is often the case here at STATWORX, the codebase can soon grow to an extent where the complexity may seem daunting. In a typical scenario, each team member works in their “corner” of the project, leaving each one merely with firm local knowledge of the project’s code but possibly only a vague idea of the overall project architecture. Ideally, however, everyone involved in the project should have a good global overview of the project. By that, I don’t mean that one has to know how each function works internally but rather to know the responsibility of the main modules and how they are interconnected.

A visual helper for learning about the global structure can be a call graph. A call graph is a directed graph that displays which function calls which. It is created from the data of a Python profiler such as cProfile.
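To make the idea concrete, here is a minimal sketch of how caller-callee edges can be read from cProfile data with the standard library. It is not how project_graph or gprof2dot work internally; the functions inner, outer, and call_edges are made up for illustration, and the sketch relies on the stats dictionary of pstats.Stats.

```python
import cProfile
import pstats


def inner():
    return sum(range(100))


def outer():
    total = 0
    for _ in range(3):
        total += inner()
    return total


def call_edges(func):
    """Profile func and return {(caller, callee): number of calls}."""
    profiler = cProfile.Profile()
    profiler.runcall(func)
    stats = pstats.Stats(profiler)
    edges = {}
    # Each entry maps (file, line, name) to (cc, nc, tt, ct, callers)
    for callee, (_cc, _nc, _tt, _ct, callers) in stats.stats.items():
        for caller, caller_stats in callers.items():
            # With cProfile, the first value is the call count for this edge
            edges[(caller[2], callee[2])] = caller_stats[0]
    return edges


for (caller, callee), calls in call_edges(outer).items():
    print(f"{caller} -> {callee}: {calls} call(s)")
```

Each printed line corresponds to one arrow in a call graph, annotated with its number of calls.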

Since such a graph proved helpful in a project I’m working on, I created a package called project_graph, which builds such a call graph for any provided Python script. The package creates a profile of the given script via cProfile, converts it into a filtered dot graph via gprof2dot, and finally exports it as a .png file.

Why Are Project Graphs Useful?

As a small first example, consider this simple module.

# test_script.py

import time

from tests.goodnight import sleep_five_seconds

def sleep_one_seconds():

time.sleep(1)

def sleep_two_seconds():

time.sleep(2)

for i in range(3):

sleep_one_seconds()

sleep_two_seconds()

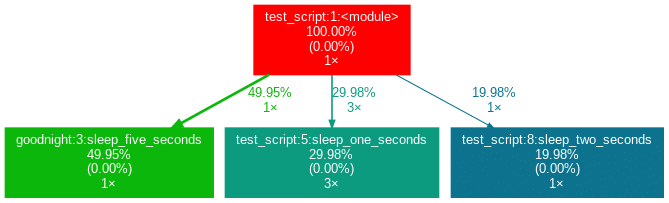

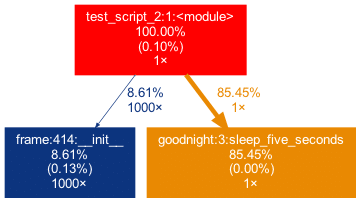

sleep_five_seconds()After installation (see below), by writing project_graph test_script.py into the command line, the following png-file is placed next to the script:

The script to be profiled always acts as a starting point and is the root of the tree. Each box is captioned with a function’s name, the overall percentage of time spent in the function, and its number of calls. The number in brackets represents the time spent in the function’s code, excluding time spent in other functions that are called in it.

In this case, all time is spent in the function sleep of the external module time, which is why the number is 0.00%. It is rare for much time to be spent in self-written functions, as the workload of a script usually trickles down quickly to very low-level functions of the Python implementation itself. Next to the arrows is the amount of time that one function passes to the other, along with the number of calls. The colors (red-green-blue, descending) and the thickness of the arrows indicate the relevance of different spots in the program.

Note that the percentages of the three functions above don’t add up to 100%. The reason is that the graph is set up to only include self-written functions. In this case, importing the time module caused the Python interpreter to spend 0.04% of the time in a function of the module importlib.

Evaluation with External Packages

Consider a second example:

# test_script_2.py
import pandas as pd

from tests.goodnight import sleep_five_seconds

# some random madness
for i in range(1000):
    a_frame = pd.DataFrame([[1, 2, 3]])

sleep_five_seconds()

To capture the time spent in pandas in the graph, we can add the external package (pandas) with the -x flag. However, initializing a pandas DataFrame is done within many pandas-internal functions. Frankly, I am personally not interested in the inner convolutions of pandas, which is why I want the tree to not “sprout” too deep into the pandas mechanics. This can be accounted for by allowing functions to show up only if a minimal percentage of the runtime is spent in them.

Exactly this can be done using the -m flag. In combination, project_graph -m 8 -x pandas test_script_2.py yields the following:

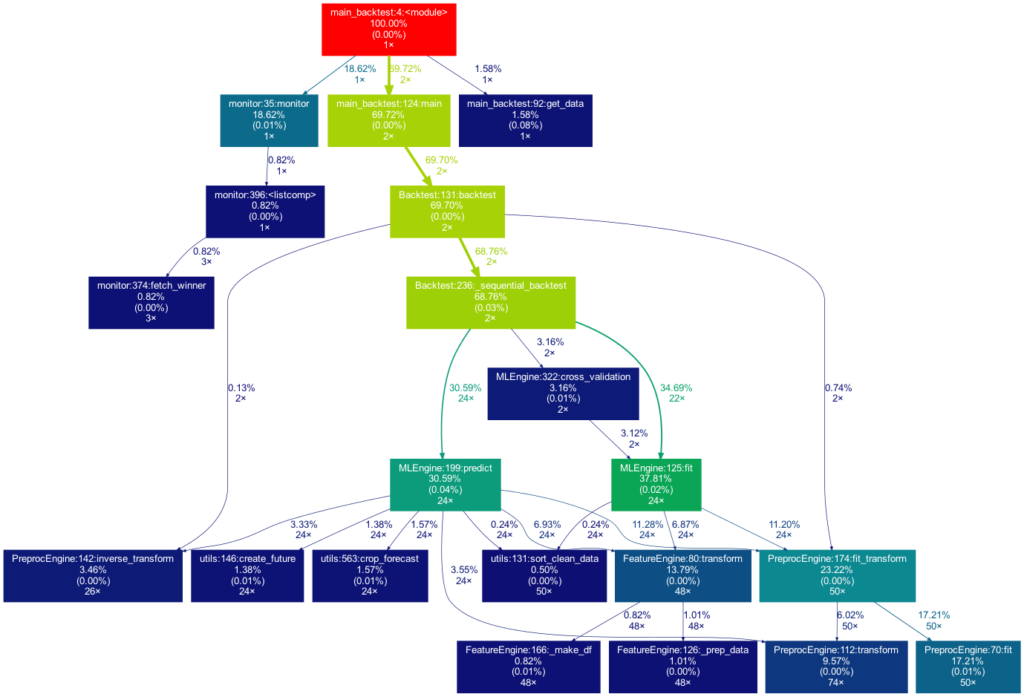

Toy examples aside, let’s move on to something more serious. A real-life data-science project could look like this one:

This time the tree is much bigger. It is actually even bigger than what you see in the illustration, as many more self-written functions are invoked. However, they are trimmed from the tree for clarity, as functions in which less than 0.5 % of the overall time is spent are filtered out (this is the default setting for the -m flag). Note that such a graph also really shines when searching for performance bottlenecks. One can see right away which functions carry most of the workload, when they are called, and how often they are called. This may prevent you from optimizing your program in the wrong spots while ignoring the elephant in the room.
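The effect of such a visibility threshold can be sketched with the standard library alone. The snippet below is only an illustration of the filtering idea, not the implementation used by project_graph or gprof2dot; the functions slow, fast, main, and visible_functions are hypothetical.

```python
import cProfile
import pstats
import time


def slow():
    time.sleep(0.05)


def fast():
    return 1


def main():
    slow()
    fast()


def visible_functions(func, min_percent):
    """Names of functions whose cumulative time is at least min_percent of the total."""
    profiler = cProfile.Profile()
    profiler.runcall(func)
    stats = pstats.Stats(profiler).stats
    # The root call has the largest cumulative time, so use it as 100%
    total = max(ct for (_cc, _nc, _tt, ct, _callers) in stats.values())
    return {
        key[2]
        for key, (_cc, _nc, _tt, ct, _callers) in stats.items()
        if 100 * ct / total >= min_percent
    }


print(visible_functions(main, min_percent=8))
```

With a threshold of 8%, the cheap function fast drops out of the result, while main and slow remain.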

How to Use Project Graphs

Installation

Within your project’s environment, do the following:

brew install graphviz

pip install git+https://github.com/fior-di-latte/project_graph.git

Usage

Within your project’s environment, change your current working directory to the project’s root (this is important!) and then enter for standard usage:

project_graph myscript.py

If your script includes an argparser, use:

project_graph "myscript.py <arg1> <arg2> (...)"

If you want to see the entire graph, including all external packages, use:

project_graph -a myscript.py

If you want to use a visibility threshold other than 1%, use:

project_graph -m <percent_value> myscript.py

Finally, if you want to include external packages into the graph, you can specify them as follows:

project_graph -x <package1> -x <package2> (...) myscript.py

Conclusion & Caveats

This package has certain weaknesses, most of which can be addressed, e.g., by formatting the code into a function-based style, by trimming with the -m flag, or adding packages by using the -x flag. Generally, if something seems odd, the best first step is probably to use the -a flag to debug. Significant caveats are the following:

- It only works on Unix systems.

- It does not show a truthful graph when used with multiprocessing. The reason behind that is that cProfile is not compatible with multiprocessing. If multiprocessing is used, only the root process will be profiled, leading to false computation times in the graph. Switch to a non-parallel version of the target script.

- Profiling a script can lead to a considerable overhead computation-wise. It can make sense to scale down the work done in your script (i.e., decrease the amount of input data). If so, the time spent in the functions, of course, can be distorted massively if the functions don’t scale linearly.

- Nested functions will not show up in the graph. In particular, a decorator implicitly nests your function and will thus hide your function. That said, when using an external decorator, don’t forget to add the decorator’s package via the -x flag (for example, project_graph -x numba myscript.py).

- If your self-written function is exclusively called from an external package’s function, you must manually add the external package with the -x flag. Otherwise, your function will not show up in the tree, as its parent is an external function and thus not considered.

Feel free to use the little package for your own project, be it for performance analysis, code introductions for new team members, or out of sheer curiosity. As for me, I find it very satisfying to see such a visualization of my projects. If you have trouble using it, don’t hesitate to hit me up on Github.

PS: If you’re looking for a similar package in R, check out Jakob’s post on flowcharts of functions.

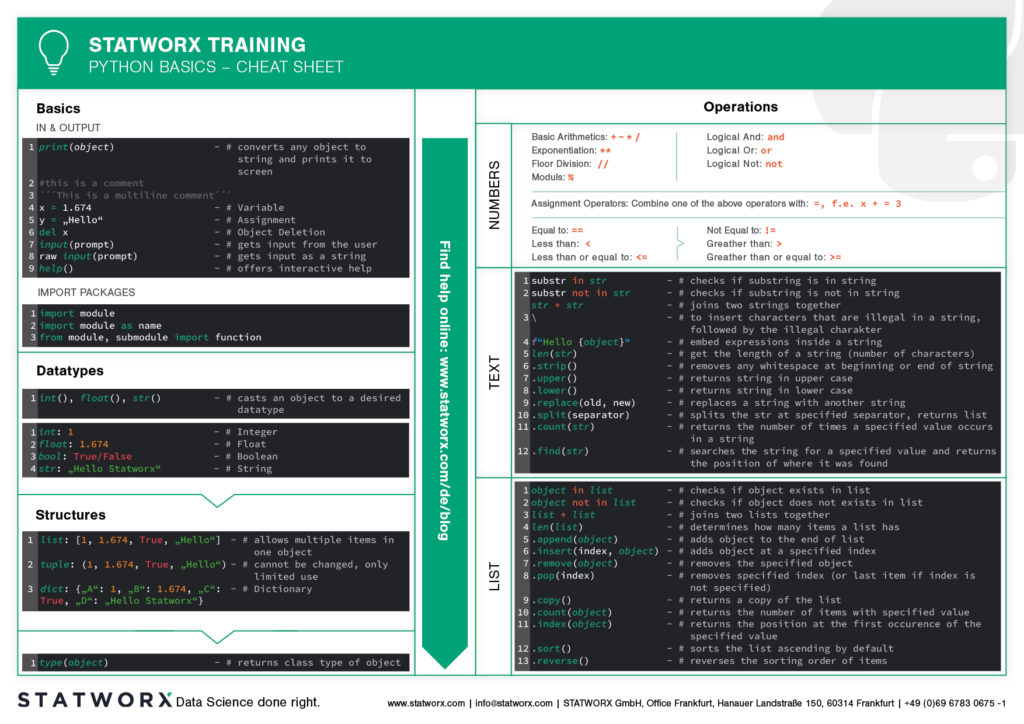

Do you want to learn Python? Or are you an R pro and you regularly miss the important functions and commands when working with Python? Or maybe you need a little reminder from time to time while coding? That’s exactly why cheatsheets were invented!

Cheatsheets help you in all these situations. Our first cheatsheet with Python basics is the start of a new blog series, where more cheatsheets will follow in our unique STATWORX style.

So stay tuned for our series of new Python cheatsheets, which will cover the basics as well as packages and workspaces relevant to Data Science.

Our cheatsheets are freely available for you to download, without registration or any other paywall.

Why have we created new cheatsheets?

As an experienced R user, you will search endlessly for state-of-the-art Python cheatsheets similar to those known from RStudio.

Sure, there are a lot of cheatsheets for every topic, but they differ greatly in design and content. As soon as we use several cheatsheets in different designs, we have to reorientate ourselves again and again and thus lose a lot of time in total. For us as data scientists it is important to have uniform cheatsheets where we can quickly find the desired function or command.

We want to counteract this annoying search for information. Therefore, we would like to regularly publish new cheatsheets in a uniform design language on our blog in the future, so that you can all benefit from this time saver.

What does the first cheatsheet contain?

Our first cheatsheet in this series is aimed primarily at Python novices, R users who use Python less often, and people who are just starting to use it.

It makes it easier to get started and get an overview of Python. It introduces basic syntax, data types and how to use them, and basic control structures. This way, you can quickly access the content you learned in our STATWORX Academy, for example, or recall the basics for your next programming project.

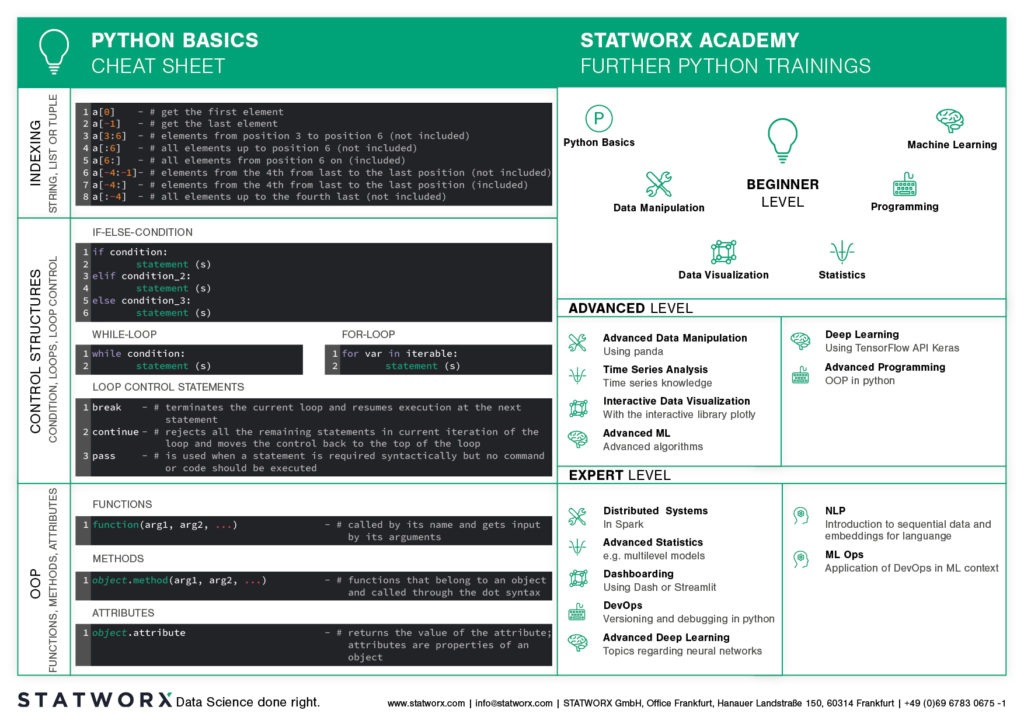

What does the STATWORX Cheatsheet Episode 2 cover?

The next cheatsheet will cover the first step of a data scientist in a new project: Data Wrangling. Also, you can expect a cheatsheet for pandas about data loading, selection, manipulation, aggregation and merging. Happy coding!

In the first post of this series, we discussed transfer learning and built a model for car model classification. In this blog post, we will discuss the problem of model deployment, using the TransferModel introduced in the first post as an example.

A model is of no use in actual practice if there is no simple way to interact with it. In other words: We need an API for our models. TensorFlow Serving has been developed to provide these functionalities for TensorFlow models. This blog post will show how a TensorFlow Serving server can be launched in a Docker container and how we can interact with the server using HTTP requests.

If you are new to Docker, we recommend working through an introductory Docker tutorial before reading this article. If you want to look at an example of deployment in Docker, we recommend reading the blog post by our colleague in which he describes how an R script can be run in Docker.

Introduction to TensorFlow Serving

Let’s start by giving you an overview of TensorFlow Serving.

TensorFlow Serving is TensorFlow’s serving system, designed to enable the deployment of various models using a uniform API. Using the abstraction of Servables, which are basically objects clients use to perform computations, it is possible to serve multiple versions of deployed models. That enables, for example, that a new version of a model can be uploaded while the previous version is still available to clients. Looking at the bigger picture, so-called Managers are responsible for handling the life-cycle of Servables, which means loading, serving, and unloading them.

In this post, we will show how a single model version can be deployed. The code examples below show how a server can be started in a Docker container and how the Predict API can be used to interact with it. To read more about TensorFlow Serving, we refer to the official TensorFlow Serving documentation.

Implementation

We will now discuss the following three steps required to deploy the model and to send requests.

- Save a model in correct format and folder structure using TensorFlow SavedModel

- Run a Serving server inside a Docker container

- Interact with the model using REST requests

Saving TensorFlow Models

If you didn’t read this series’ first post, here’s a brief summary of the most important points needed to understand the code below:

The TransferModel.model is a tf.keras.Model instance, so it can be saved using Model’s built-in save method. Further, as the model was trained on web-scraped data, the class labels can change when re-scraping the data. We thus store the index-class mapping in classes.pickle when storing the model. TensorFlow Serving requires the model to be stored in the SavedModel format. When using tf.keras.Model.save, the path must be a folder name, else the model will be stored in another format (e.g., HDF5) which is not compatible with TensorFlow Serving. Below, folderpath contains the path of the folder in which we want to store all model-relevant information. The SavedModel is stored in folderpath/model and the class mapping is stored as folderpath/classes.pickle.

# Method of the TransferModel class; os and pandas (as pd) are imported at module level
def save(self, folderpath: str):
    """
    Save the model using tf.keras.Model.save

    Args:
        folderpath: (Full) Path to folder where model should be stored
    """
    # Make sure folderpath ends on slash, else fix
    if not folderpath.endswith("/"):
        folderpath += "/"

    if self.model is not None:
        os.mkdir(folderpath)
        model_path = folderpath + "model"
        # Save model to model dir
        self.model.save(filepath=model_path)
        # Save associated class mapping
        class_df = pd.DataFrame({'classes': self.classes})
        class_df.to_pickle(folderpath + "classes.pickle")
    else:
        raise AttributeError('Model does not exist')

Start TensorFlow Serving in Docker Container

Having saved the model to the disk, you now need to start the TensorFlow Serving server. Fortunately, there is an easy-to-use Docker container available. The first step is therefore pulling the TensorFlow Serving image from DockerHub. That can be done in the terminal using the command docker pull tensorflow/serving.

Then we can use the code below to start a TensorFlow Serving container. It runs the shell command for starting a container. The options set in the docker_run_cmd are the following:

- The serving image exposes port 8501 for the REST API, which we will use later to send requests. Thus we map the host port 8501 to the container’s 8501 port using -p.

- Next, we mount our model to the container using -v. It is essential that the model is stored in a versioned folder (here MODEL_VERSION=1); else, the serving image will not find the model. model_path_guest thus must be of the form <path>/<model name>/MODEL_VERSION, where MODEL_VERSION is an integer.

- Using -e, we can set the environment variable MODEL_NAME to our model’s name.

- The --name tf_serving option is only needed to assign a specific name to our new docker container.

If we try to run this file twice in a row, the docker command will not be executed the second time, as a container with the name tf_serving already exists. To avoid this problem, we use docker_run_cmd_cond. Here, we first check if a container with this specific name already exists and is running. If it does, we leave it; if not, we check if an exited version of the container exists. If it does, it is deleted, and a new container is started; if not, a new one is created directly.

import os

MODEL_FOLDER = 'models'
MODEL_SAVED_NAME = 'resnet_unfreeze_all_filtered.tf'
MODEL_NAME = 'resnet_unfreeze_all_filtered'
MODEL_VERSION = '1'

# Define paths on host and guest system
model_path_host = os.path.join(os.getcwd(), MODEL_FOLDER, MODEL_SAVED_NAME, 'model')
model_path_guest = os.path.join('/models', MODEL_NAME, MODEL_VERSION)

# Container start command
docker_run_cmd = f'docker run ' \
                 f'-p 8501:8501 ' \
                 f'-v {model_path_host}:{model_path_guest} ' \
                 f'-e MODEL_NAME={MODEL_NAME} ' \
                 f'-d ' \
                 f'--name tf_serving ' \
                 f'tensorflow/serving'

# If container is not running, create a new instance and run it
docker_run_cmd_cond = f'if [ ! "$(docker ps -q -f name=tf_serving)" ]; then \n' \
                      f' if [ "$(docker ps -aq -f status=exited -f name=tf_serving)" ]; then \n' \
                      f' docker rm tf_serving \n' \
                      f' fi \n' \
                      f' {docker_run_cmd} \n' \
                      f'fi'

# Start container
os.system(docker_run_cmd_cond)

Instead of mounting the model from our local disk using the -v flag in the docker command, we could also copy the model into the docker image, so the model could be served simply by running a container and specifying the port assignments. It is important to note that, in this case, the model needs to be saved using the folder structure folderpath/<model name>/1, as explained above. If this is not the case, TensorFlow Serving will not find the model. We will not go into further detail here. If you are interested in deploying your models in this way, we refer to the instructions on the TensorFlow website.
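As a side note on the docker run options discussed above: the command can also be assembled as a list of arguments, which avoids shell quoting problems when paths contain spaces. This is a sketch of an alternative, not the approach used in this post; the helper docker_run_args and its parameter names are made up for illustration.

```python
def docker_run_args(model_path_host, model_name, model_version="1",
                    container_name="tf_serving", rest_port=8501):
    """Build the argument list for starting a TensorFlow Serving container."""
    # The model must be mounted into a versioned folder inside the container
    model_path_guest = f"/models/{model_name}/{model_version}"
    return [
        "docker", "run",
        "-p", f"{rest_port}:8501",                       # host port : REST port
        "-v", f"{model_path_host}:{model_path_guest}",   # mount the SavedModel
        "-e", f"MODEL_NAME={model_name}",                # model name for serving
        "-d",                                            # run in the background
        "--name", container_name,
        "tensorflow/serving",
    ]


args = docker_run_args("/home/user/models/my model/model", "resnet_unfreeze_all_filtered")
print(args)
# To actually start the container (requires Docker to be installed):
# import subprocess; subprocess.run(args, check=True)
```

Because each argument is a separate list element, the host path with a space in the example needs no extra quoting.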

REST Request

Since the model is now served and ready to use, we need a way to interact with it. TensorFlow Serving provides two options to send requests to the server: gRPC and REST API, both exposed at different ports. In the following code example, we will use REST to query the model.

First, we load an image from the disk for which we want a prediction. This can be done using TensorFlow’s image module. Next, we convert the image to a numpy array using the img_to_array method. The next and final step is crucial: since we preprocessed the training images before we trained our model (e.g., normalization), we need to apply the same transformation to the image we want to predict. The handy preprocess_input function makes sure that all necessary transformations are applied to our image.

from tensorflow.keras.preprocessing import image
from tensorflow.keras.applications.resnet_v2 import preprocess_input

# Load image (path points to the image file on disk)
img = image.load_img(path, target_size=(224, 224))
img = image.img_to_array(img)

# Preprocess and reshape data
img = preprocess_input(img)
img = img.reshape(-1, *img.shape)

import json

import numpy as np
import requests

# Send image as list to TF serving via json dump
request_url = 'http://localhost:8501/v1/models/resnet_unfreeze_all_filtered:predict'
request_body = json.dumps({"signature_name": "serving_default", "instances": img.tolist()})
request_headers = {"content-type": "application/json"}
json_response = requests.post(request_url, data=request_body, headers=request_headers)
response_body = json.loads(json_response.text)
predictions = response_body['predictions']

# Get label from prediction (classes is the index-class mapping stored with the model)
y_hat_idx = np.argmax(predictions)
y_hat = classes[y_hat_idx]

TensorFlow Serving’s RESTful API offers several endpoints. In general, the API accepts post requests following this structure:

POST http://host:port/<URI>:<VERB>

URI: /v1/models/${MODEL_NAME}[/versions/${MODEL_VERSION}]

VERB: classify|regress|predict

For our model, we can use the following URL for predictions:

http://localhost:8501/v1/models/resnet_unfreeze_all_filtered:predict

The port number (here 8501) is the host’s port we specified above to map to the serving image’s port 8501. As mentioned above, 8501 is the serving container’s port exposed for the REST API. The model version is optional and will default to the latest version if omitted.
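The URL structure described above can be captured in a small helper. The function predict_url and its parameters are hypothetical and only illustrate how the optional version segment fits into the pattern.

```python
def predict_url(host, port, model_name, version=None, verb="predict"):
    """Build a TensorFlow Serving REST URL of the form http://host:port/<URI>:<VERB>."""
    uri = f"/v1/models/{model_name}"
    if version is not None:
        # Without this segment, the server uses the latest available version
        uri += f"/versions/{version}"
    return f"http://{host}:{port}{uri}:{verb}"


print(predict_url("localhost", 8501, "resnet_unfreeze_all_filtered"))
print(predict_url("localhost", 8501, "resnet_unfreeze_all_filtered", version=1))
```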

In Python, the requests library can be used to send HTTP requests. As stated in the TensorFlow Serving documentation, the request body for the predict API must be a JSON object with the key-value pairs listed below:

- signature_name – serving signature to use

- instances – model input in row format

The response body will also be a JSON object with a single key called predictions. Since we get, for each row in instances, the probabilities for all 300 classes, we use np.argmax to return the most likely class. Alternatively, we could have used the higher-level classify API.
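To show how such a response can be turned into labels without a running server, here is a small sketch that works on a hand-written response. It uses only the standard library instead of np.argmax to stay self-contained; the helper top_classes, the three class names, and the probabilities are made up for illustration.

```python
import json


def top_classes(response_text, classes):
    """Return the most likely class label for each row in 'predictions'."""
    body = json.loads(response_text)
    labels = []
    for row in body["predictions"]:
        # Index of the largest probability in this row (same idea as np.argmax)
        best_idx = max(range(len(row)), key=row.__getitem__)
        labels.append(classes[best_idx])
    return labels


# Hand-written stand-in for json_response.text with three hypothetical classes
fake_response = json.dumps({"predictions": [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]]})
print(top_classes(fake_response, ["class_a", "class_b", "class_c"]))
```

Handling one row at a time also makes it explicit that a single request can carry several instances.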

Conclusion

In this second blog article of the Car Model Classification series, we learned how to deploy a TensorFlow model for image recognition using TensorFlow Serving as a REST API, and how to run model queries with it.

To do so, we first saved the model using the SavedModel format. Next, we started the TensorFlow Serving server in a Docker container. Finally, we showed how to request predictions from the model using the API endpoints and a correctly specified request body.

A major criticism of deep learning models of any kind is the lack of explainability of the predictions. In the third blog post, we will show how to explain model predictions using a method called Grad-CAM.

At STATWORX, we are very passionate about the field of deep learning. In this blog series, we want to illustrate how an end-to-end deep learning project can be implemented. We use the TensorFlow 2.x library for the implementation. The topics of the series include:

- Transfer learning for computer vision.

- Model deployment via TensorFlow Serving.

- Interpretability of deep learning models via Grad-CAM.

- Integrating the model into a Dash dashboard.

In the first part, we will show how you can use transfer learning to tackle car image classification. We start by giving a brief overview of transfer learning and the ResNet and then go into the implementation details. The code presented can be found in this github repository.

Introduction: Transfer Learning & ResNet

What is Transfer Learning?

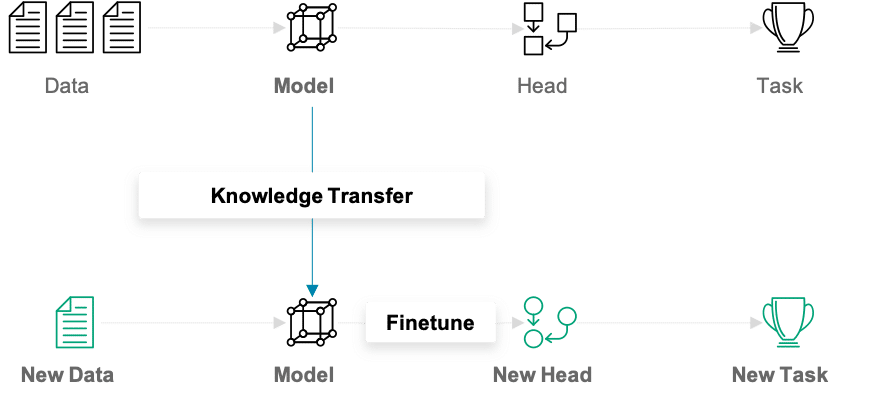

In traditional (machine) learning, we develop a model and train it on new data for every new task at hand. Transfer learning differs from this approach in that knowledge is transferred from one task to another. It is a useful approach when one is faced with the problem of too little available training data. Models that are pretrained for a similar problem can be used as a starting point for training new models. The pretrained models are referred to as base models.

In our example, a deep learning model trained on the ImageNet dataset can be used as the starting point for building a car model classifier. The main idea behind transfer learning for deep learning models is that the first layers of a network are used to extract important high-level features, which remain similar for the kind of data treated. The final layers (also known as the head) of the original network are replaced by a custom head suitable for the problem at hand. The weights in the head are initialized randomly, and the resulting network can be trained for the specific task.

There are various ways in which the base model can be treated during training. In the first step, its weights can be fixed. If the learning progress suggests that the model is not flexible enough, certain layers or the entire base model can be “unfrozen” and thus made trainable. A further important aspect to note is that the input must be of the same dimensionality as the data on which the model was trained, unless the first layers of the base model are modified.

Next, we will briefly introduce the ResNet, a popular and powerful CNN architecture for image data. Then, we will show how we used transfer learning with ResNet to do car model classification.

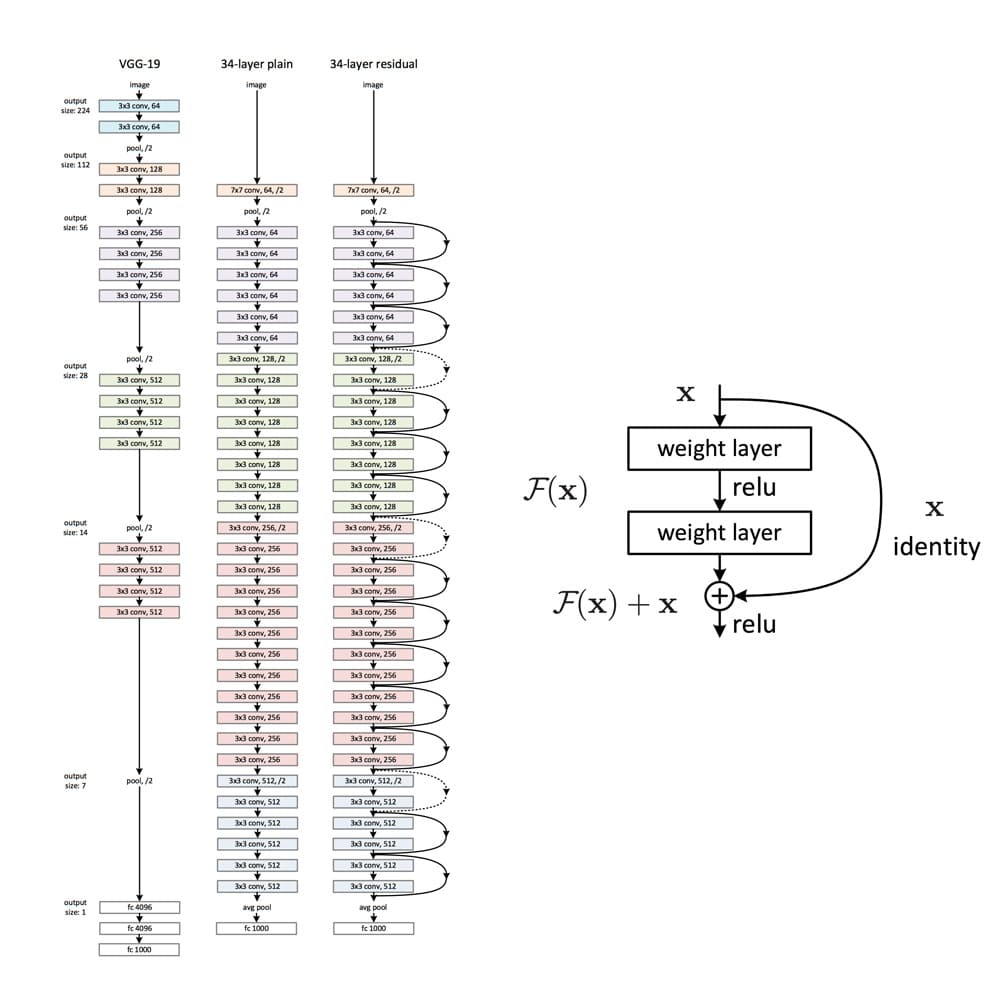

What is ResNet?

Training deep neural networks can quickly become challenging due to the so-called vanishing gradient problem. But what are vanishing gradients? Neural networks are commonly trained using back-propagation. This algorithm leverages the chain rule of calculus to derive the gradients at earlier layers of the network by multiplying them with the gradients of the layers that follow. Since gradients get repeatedly multiplied in deep networks, they can quickly approach infinitesimally small values during back-propagation.
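A tiny numeric example makes the effect tangible. The sketch below assumes a toy chain of scalar sigmoid layers with weight 1 and pre-activation 0, which is a strong simplification of a real network; the function names are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never larger than 0.25


def chained_gradient(depth, z=0.0, weight=1.0):
    """Product of the local derivatives of 'depth' stacked scalar sigmoid layers."""
    grad = 1.0
    for _ in range(depth):
        grad *= weight * sigmoid_grad(z)
    return grad


for depth in (1, 5, 20):
    print(depth, chained_gradient(depth))
```

With 20 layers, the product is already about 9.1e-13, so the earliest layers receive almost no learning signal.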

ResNet is a CNN network that solves the vanishing gradient problem using so-called residual blocks (you find a good explanation of why they are called ‘residual’ here). The unmodified input is passed on to the next layer in the residual block by adding it to a layer’s output (see right figure). This modification makes sure that a better information flow from the input to the deeper layers is possible. The entire ResNet architecture is depicted in the right network in the left figure below. It is plotted alongside a plain CNN and the VGG-19 network, another standard CNN architecture.
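The effect of adding the unmodified input can again be illustrated with scalars. If a block computes x + f(x), its local derivative is 1 + f'(x) instead of f'(x). The sketch below assumes the same small local derivative in every layer, which is a simplification; the function names are made up for illustration.

```python
def plain_gradient(local_grads):
    """Gradient through a plain chain: product of the local derivatives f'(x)."""
    grad = 1.0
    for g in local_grads:
        grad *= g
    return grad


def residual_gradient(local_grads):
    """Gradient through residual blocks x + f(x): product of (1 + f'(x))."""
    grad = 1.0
    for g in local_grads:
        grad *= 1.0 + g
    return grad


local_grads = [0.01] * 20  # assumed small derivative in each of 20 layers
print(plain_gradient(local_grads))
print(residual_gradient(local_grads))
```

In this toy setting, the plain chain shrinks the gradient to about 1e-40, while the residual version stays at about 1.22, because the identity path always contributes a factor of one.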

ResNet has proved to be a powerful network architecture for image classification problems. For example, an ensemble of ResNets with 152 layers won the ILSVRC 2015 image classification contest. Pretrained ResNet models of different sizes are available in the tensorflow.keras.applications module, namely ResNet50, ResNet101, ResNet152 and their corresponding second versions (ResNet50V2, …). The number following the model name denotes the number of layers in the network. The available weights are pretrained on the ImageNet dataset. The models were trained on large computing clusters using hardware accelerators for significant time periods. Transfer learning thus enables us to leverage these training results using the obtained weights as a starting point.

Classifying Car Models

As an illustrative example of how transfer learning can be applied, we treat the problem of classifying the car model given an image of the car. We will start by describing the dataset we used and how we can filter out unwanted examples in the dataset. Next, we will go over how a data pipeline can be set up using tensorflow.data. In the second section, we will talk you through the model implementation and point out what aspects to be particularly careful about during training and prediction.

Data Preparation

We used the dataset described in this github repo, where you can also download the entire dataset. The author built a datascraper to scrape all car images from the car connection website. He explains that many images are from the interior of the cars. As they are not wanted in the dataset, they are filtered out based on pixel color. The dataset contains 64’467 jpg images, where the file names contain information on the car’s make, model, build year, etc. For a more detailed insight on the dataset, we recommend you consult the original github repo. Three sample images are shown below.

While checking through the data, we observed that the dataset still contained many unwanted images, e.g., pictures of wing mirrors, door handles, GPS panels, or lights. Examples of unwanted images can be seen below.

Thus, it is beneficial to additionally prefilter the data to clean out more of the unwanted images.

Filtering Unwanted Images Out of the Dataset

There are multiple possible approaches to filter non-car images out of the dataset:

- Use a pretrained model

- Train another model to classify car/no-car

- Train a generative network on a car dataset and use the discriminator part of the network

We decided to pursue the first approach since it is the most direct one and excellent pretrained models are readily available. If you want to follow the second or third approach, you could, e.g., use this dataset to train the model. The referenced dataset only contains images of cars but is significantly smaller than the dataset we used.

We chose the ResNet50V2 in the tensorflow.keras.applications module with the pretrained “imagenet” weights. In a first step, we must figure out the indices and class names of the ImageNet labels corresponding to car images.

# Class labels in imagenet corresponding to cars
CAR_IDX = [656, 627, 817, 511, 468, 751, 705, 757, 717, 734, 654, 675, 864, 609, 436]

# Note: 'jeep' and 'landrover' are two names for the same ImageNet class (index 609),
# so this list has one more entry than CAR_IDX
CAR_CLASSES = ['minivan', 'limousine', 'sports_car', 'convertible', 'cab', 'racer', 'passenger_car', 'recreational_vehicle', 'pickup', 'police_van', 'minibus', 'moving_van', 'tow_truck', 'jeep', 'landrover', 'beach_wagon']

Next, the pretrained ResNet50V2 model is loaded.

from tensorflow.keras.applications import ResNet50V2

model = ResNet50V2(weights='imagenet')

We can then use this model to make predictions for images. The images fed to the prediction method must be scaled identically to the images used for training. The different ResNet models are trained on different input scales. It is thus essential to apply the correct image preprocessing. The module tensorflow.keras.applications.resnet_v2 contains the function preprocess_input, which should be used when using a ResNetV2 network. This function expects the image arrays to be of type float and have values in [0, 255]. Using the appropriately preprocessed input, we can then use the built-in predict method to obtain predictions given an image stored at filename:

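import tensorflow as tf
from tensorflow.keras.applications.resnet_v2 import preprocess_input

# Read and decode the image as RGB
image = tf.io.read_file(filename)
image = tf.image.decode_jpeg(image, channels=3)
image = tf.cast(image, tf.float32)
# Center-crop or zero-pad to the 224x224 input size of the network
image = tf.image.resize_with_crop_or_pad(image, target_height=224, target_width=224)
image = preprocess_input(image)
# predict expects a batch, so add a leading batch dimension: (1, 224, 224, 3)
image = tf.expand_dims(image, axis=0)
predictions = model.predict(image)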
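To make the scaling requirement more concrete: to our knowledge, preprocess_input for the ResNetV2 family maps pixel values from [0, 255] to [-1, 1]. The NumPy sketch below mimics only this scaling for illustration. The helper name resnet_v2_scale is hypothetical, and in a real pipeline you should keep calling preprocess_input rather than re-implementing it.

```python
import numpy as np


def resnet_v2_scale(image):
    """Map pixel values from [0, 255] to [-1, 1] (illustrative helper, not a Keras function)."""
    return np.asarray(image, dtype=np.float32) / 127.5 - 1.0


# Black, mid-gray, and white pixel values
pixels = np.array([0.0, 127.5, 255.0])
scaled = resnet_v2_scale(pixels)
print(scaled)
```

Feeding images that were already scaled to [0, 1] into such a function would squeeze all values close to -1, which is one reason why predictions degrade when the preprocessing does not match the one used for training.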

There are various ideas of how the obtained predictions can be used for car detection.

- Is one of the CAR_CLASSES among the top k predictions?

- Is the accumulated probability of the CAR_CLASSES in the predictions greater than some defined threshold?

- Specific treatment of unwanted images (e.g., detect and filter out wheels)

We show the code for comparing the accumulated probability mass over the CAR_CLASSES.

import numpy as np

THRESH = 0.1  # threshold discussed below

def is_car_acc_prob(predictions, thresh=THRESH, car_idx=CAR_IDX):
    """
    Determine if car on image by accumulating probabilities of car prediction and comparing to threshold

    Args:
        predictions: (?, 1000) matrix of probability predictions resulting from ResNet with imagenet weights
        thresh: threshold accumulative probability over which an image is considered a car
        car_idx: indices corresponding to cars

    Returns:
        np.array of booleans describing if car or not
    """
    predictions = np.array(predictions, dtype=float)
    # Select the columns of the car classes and sum them up per image
    car_probs = predictions[:, car_idx]
    car_probs_acc = car_probs.sum(axis=1)
    return car_probs_acc > thresh

The higher the threshold is set, the stricter the filtering procedure is. We found that THRESH = 0.1 provides good results while ensuring that we do not lose too many true car images. The choice of an appropriate threshold remains subjective, so adjust it to your own needs.
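The top-k idea from the list above can be sketched in a similar way. This is only an illustration and not code from the repository: the function name is_car_top_k and the default k=5 are assumptions, and CAR_IDX is repeated so the snippet is self-contained.

```python
import numpy as np

# ImageNet indices of the car classes (same values as CAR_IDX above)
CAR_IDX = [656, 627, 817, 511, 468, 751, 705, 757, 717, 734, 654, 675, 864, 609, 436]


def is_car_top_k(predictions, k=5, car_idx=CAR_IDX):
    """Return a boolean array: is any car class among the k most probable classes?"""
    predictions = np.asarray(predictions, dtype=float)
    # Indices of the k largest probabilities per row (order within the top k is irrelevant)
    top_k = np.argpartition(-predictions, k - 1, axis=1)[:, :k]
    return np.isin(top_k, car_idx).any(axis=1)


# Toy predictions: the first image is dominated by 'sports_car' (817),
# the second by five non-car classes
toy = np.zeros((2, 1000))
toy[0, 817] = 0.9
toy[1, :5] = 0.2
print(is_car_top_k(toy, k=5))
```

Compared to the accumulated probability, this variant ignores how much probability mass the car classes receive and only looks at their rank, so it tends to behave differently on images where many classes get similar scores.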

The Colab notebook that uses the function is_car_acc_prob to filter the dataset is available in the github repository.

While tuning the prefiltering procedure, we observed the following:

- Many of the car images with light backgrounds were classified as “beach wagons”. We thus decided to also consider the “beach wagon” class in imagenet as one of the CAR_CLASSES.

- Images showing the front of a car are often assigned a high probability of “grille”, which is the grating at the front of a car used for cooling. This assignment is correct but leads the procedure shown above to not consider certain car images as cars since we did not include “grille” in the CAR_CLASSES. This problem results in the trade-off of either leaving many close-up images of car grilles in the dataset or filtering out several car images. We opted for the second approach since it yields a cleaner car dataset.

After prefiltering the images using the suggested procedure, 53’738 of the initial 64’467 images remain in the dataset.

Overview of the Final Datasets

The prefiltered dataset contains images from 323 car models. We decided to restrict our attention to the 300 most frequent classes in the dataset. That makes sense since some of the least frequent classes have fewer than ten representatives and thus cannot be reasonably split into a train, validation, and test set. Reducing the dataset to images in the top 300 classes leaves us with a dataset containing 53’536 labeled images. The class occurrences are distributed as follows:

The number of images per class (car model) ranges from 24 to slightly below 500. We can see that the dataset is very imbalanced. It is essential to keep this in mind when training and evaluating the model.
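One common way to account for such an imbalance during training is to weight each class inversely to its frequency. The sketch below is an assumption on our side rather than the weighting used in the repository: the function name balanced_class_weights and the "balanced" heuristic n_samples / (n_classes * class_count) are illustrative choices. The result is a dictionary mapping class indices to weights, which is the format Keras accepts for the class_weight argument of fit().

```python
from collections import Counter


def balanced_class_weights(labels, classes):
    """Compute weights inversely proportional to class frequency.

    labels: list with one class name per training image
    classes: ordered list of class names (position = class index)
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(classes)
    return {
        idx: n_samples / (n_classes * counts[name])
        for idx, name in enumerate(classes)
        if counts[name] > 0  # skip classes without any training image
    }


# Toy example with a 3:1 imbalance between two hypothetical car models
toy_labels = ["model_a"] * 6 + ["model_b"] * 2
toy_weights = balanced_class_weights(toy_labels, classes=["model_a", "model_b"])
print(toy_weights)
```

With these weights, an error on the rare class contributes more to the loss than an error on the frequent class. For evaluation, per-class metrics are usually more informative than overall accuracy on such data.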

Building Data Pipelines with tf.data

Even after prefiltering and reducing to the top 300 classes, we still have numerous images left. This poses a potential problem since we cannot simply load all images into the memory of our GPU at once. To tackle this problem, we will use tf.data.

tf.data, and especially the tf.data.Dataset API, allows creating elegant and, at the same time, very efficient input pipelines. The API contains many general methods that can be applied to load and transform potentially large datasets. tf.data.Dataset is especially useful when training models on GPU(s). It loads data from the HDD, applies transformations on the fly, and creates batches that are then sent to the GPU. All of this is done in such a way that the GPU ideally never has to wait for new data.

The following functions create a tf.data.Dataset instance for our particular problem:

def construct_ds(input_files: list,
                 batch_size: int,
                 classes: list,
                 label_type: str,
                 input_size: tuple = (212, 320),
                 prefetch_size: int = 10,
                 shuffle_size: int = 32,
                 shuffle: bool = True,
                 augment: bool = False):
    """
    Function to construct a tf.data.Dataset from list of files

    Args:
        input_files: list of files
        batch_size: number of observations in batch
        classes: list with all class labels
        input_size: size of images (output size)
        prefetch_size: buffer size (number of batches to prefetch)
        shuffle_size: shuffle size (size of buffer to shuffle from)
        shuffle: boolean specifying whether to shuffle dataset
        augment: boolean if image augmentation should be applied
        label_type: 'make' or 'model'

    Returns:
        buffered and prefetched tf.data.Dataset object with (image, label) tuple
    """
    # Create tf.data.Dataset from list of files
    ds = tf.data.Dataset.from_tensor_slices(input_files)

    # Shuffle files
    if shuffle:
        ds = ds.shuffle(buffer_size=shuffle_size)

    # Load image/labels
    ds = ds.map(lambda x: parse_file(x, classes=classes, input_size=input_size, label_type=label_type))

    # Image augmentation
    if augment and tf.random.uniform((), minval=0, maxval=1, dtype=tf.dtypes.float32, seed=None, name=None) < 0.7:
        ds = ds.map(image_augment)

    # Batch and prefetch data
    ds = ds.batch(batch_size=batch_size)
    ds = ds.prefetch(buffer_size=prefetch_size)

    return ds

We will now describe the tf.data methods we used:

- from_tensor_slices() is one of the available methods for the creation of a dataset. The created dataset contains slices of the given tensor, in this case, the filenames.

- Next, the shuffle() method fills a buffer with buffer_size elements and draws randomly from this buffer, so items are only shuffled within the buffer and not across the complete dataset. If shuffling of the complete dataset is required, buffer_size must be at least as large as the number of entries in the dataset. Shuffling is only performed if shuffle=True.

- map() allows applying arbitrary functions to the dataset. We created a function parse_file() that can be found in the GitHub repo. It is responsible for reading and resizing the images, inferring the labels from the file name, and encoding the labels using a one-hot encoder. If the augment flag is set, the data augmentation procedure is activated. Augmentation is meant to be applied in only 70% of the cases since it is beneficial to also train the model on non-modified images. Note that, as written above, the random draw is evaluated once when construct_ds() is called, so a given dataset is either passed through image_augment as a whole or not at all. The augmentation techniques used in image_augment are flipping, brightness, and contrast adjustments.

- Finally, the batch() method is used to group the dataset into batches of batch_size elements, and the prefetch() method enables preparing later elements while the current element is being processed and thus improves performance. If used after a call to batch(), prefetch_size batches are prefetched.
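If the goal is to augment roughly 70% of the individual images, the random draw can be moved into the mapped function so that it is evaluated for every element. The following is a minimal sketch under stated assumptions: image_augment_sketch is a hypothetical stand-in for the repository's image_augment (whose exact signature is not shown here), maybe_augment and the parameter p are illustrative names, and the random tensors replace real images.

```python
import tensorflow as tf


def image_augment_sketch(image, label):
    """Hypothetical stand-in for image_augment: flip, brightness, and contrast."""
    image = tf.image.random_flip_left_right(image)
    image = tf.image.random_brightness(image, max_delta=0.1)
    image = tf.image.random_contrast(image, lower=0.8, upper=1.2)
    return image, label


def maybe_augment(image, label, p=0.7):
    """Augment this single element with probability p, otherwise pass it through."""
    do_augment = tf.random.uniform(()) < p
    image = tf.cond(do_augment,
                    lambda: image_augment_sketch(image, label)[0],
                    lambda: image)
    return image, label


# Toy data: 8 random "images" with one-hot labels for 3 classes
images = tf.random.uniform((8, 16, 16, 3), maxval=1.0)
labels = tf.one_hot(tf.range(8) % 3, depth=3)

ds = tf.data.Dataset.from_tensor_slices((images, labels))
ds = ds.map(maybe_augment).batch(4)

for batch_images, batch_labels in ds:
    print(batch_images.shape, batch_labels.shape)
```

Because the draw happens inside map(), each image gets its own decision, and the decision changes from epoch to epoch. Both branches of tf.cond must return tensors of the same dtype and shape, which holds here since the augmentations keep the image size unchanged.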

Model Fine Tuning

Having defined our input pipeline, we now turn towards the model training part. Below you can see the code that can be used to instantiate a model based on the pretrained ResNet, which is available in tf.keras.applications:

from tensorflow.keras.applications import ResNet50V2
from tensorflow.keras import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D

class TransferModel:

    def __init__(self, shape: tuple, classes: list):
        """
        Class for transfer learning from ResNet

        Args:
            shape: Input shape as tuple (height, width, channels)
            classes: List of class labels
        """
        self.shape = shape
        self.classes = classes
        self.history = None
        self.model = None

        # Use pre-trained ResNet model
        self.base_model = ResNet50V2(include_top=False,
                                     input_shape=self.shape,
                                     weights='imagenet')

        # Allow parameter updates for all layers
        self.base_model.trainable = True

        # Add a new pooling layer on the original output
        add_to_base = self.base_model.output
        add_to_base = GlobalAveragePooling2D(data_format='channels_last', name='head_gap')(add_to_base)

        # Add new output layer as head
        new_output = Dense(len(self.classes), activation='softmax', name='head_pred')(add_to_base)

        # Define model
        self.model = Model(self.base_model.input, new_output)

A few more details on the code above:

- We first create an instance of class tf.keras.applications.ResNet50V2. With include_top=False we tell the pretrained model to leave out the original head of the model (in this case designed for the classification of 1000 classes on ImageNet).

- base_model.trainable = True makes all layers trainable.

- Using the tf.keras functional API, we then stack a new pooling layer on top of the last convolution block of the original ResNet model. This is a necessary intermediate step before feeding the output to the final classification layer, since it reduces each feature map to a single value and thus yields one feature vector per image.

- The final classification layer is then defined using tf.keras.layers.Dense. We define the number of neurons to be equal to the number of desired classes. The softmax activation function ensures that the outputs lie between 0 and 1 and sum to 1, so they can be interpreted as pseudo probabilities.
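What the new head computes can be illustrated with plain NumPy. The sketch below is not part of the model code: the feature map shape (7, 10, 2048), the random weights, and the helper softmax are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the output of the last convolution block:
# (batch, height, width, channels), shape chosen for illustration
features = rng.normal(size=(2, 7, 10, 2048))

# Global average pooling: average over the spatial axes -> one vector per image
pooled = features.mean(axis=(1, 2))

# Dense layer with 300 output neurons (one per car model), random weights
weights = rng.normal(scale=0.01, size=(2048, 300))
bias = np.zeros(300)
logits = pooled @ weights + bias


def softmax(z):
    """Numerically stable softmax along the class axis."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


probs = softmax(logits)
print(pooled.shape, probs.shape, probs.sum(axis=1))
```

The pooling step removes the spatial dimensions, so the dense layer sees the same number of inputs regardless of the image size, and the softmax turns the 300 scores into values that sum to 1 per image.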

The full version of TransferModel (see GitHub) also contains the option to replace the base model with a VGG16 network, another standard CNN for image classification. In addition, it allows unfreezing only specific layers, meaning we can make the corresponding parameters trainable while leaving the others fixed. As a default, we have made all parameters trainable here.

After we have defined the model, we need to configure it for training. This can be done using tf.keras.Model's compile() method:
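Partial unfreezing could look roughly as follows. This is a sketch and not the implementation from the repository: the helper unfreeze_last_layers and its argument n_trainable are hypothetical, and a small stack of dense layers stands in for the ResNet base model to keep the example light.

```python
import tensorflow as tf


def unfreeze_last_layers(base_model, n_trainable):
    """Make only the last n_trainable layers trainable and freeze all others."""
    base_model.trainable = True
    n_frozen = len(base_model.layers) - n_trainable
    for layer in base_model.layers[:n_frozen]:
        layer.trainable = False
    return base_model


# Small stand-in for a pretrained base model
demo_model = tf.keras.Sequential([
    tf.keras.layers.Dense(4),
    tf.keras.layers.Dense(4),
    tf.keras.layers.Dense(4),
    tf.keras.layers.Dense(2),
])

unfreeze_last_layers(demo_model, n_trainable=2)
print([layer.trainable for layer in demo_model.layers])
```

The trainable flags should be set before compile() is called, since changes made afterwards only take effect once the model is compiled again.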

def compile(self, **kwargs):
    """
    Compile method
    """
    self.model.compile(**kwargs)

We then pass the following keyword arguments to our method:

- loss = "categorical_crossentropy" for multi-class classification,

- optimizer = Adam(0.0001) for using the Adam optimizer from tf.keras.optimizers with a relatively small learning rate (more on the learning rate below), and

- metrics = ["categorical_accuracy"] for training and validation monitoring.
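Put together, the call could look like the sketch below. To keep it self-contained and quick to run, a tiny stand-in network (tiny_model, a hypothetical name) replaces the TransferModel; the keyword arguments are the ones listed above and would be forwarded unchanged by the compile() wrapper.

```python
import tensorflow as tf
from tensorflow.keras.optimizers import Adam

# Tiny stand-in model with 3 output classes (not the ResNet-based model)
tiny_model = tf.keras.Sequential([
    tf.keras.layers.Input(shape=(8,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Same keyword arguments as passed to TransferModel.compile()
tiny_model.compile(loss="categorical_crossentropy",
                   optimizer=Adam(0.0001),
                   metrics=["categorical_accuracy"])

# One-hot encoded toy labels, as required by categorical_crossentropy
x_toy = tf.zeros((4, 8))
y_toy = tf.one_hot([0, 1, 2, 0], depth=3)
results = tiny_model.evaluate(x_toy, y_toy, verbose=0)
print(results)  # [loss, categorical_accuracy]
```

Note that categorical_crossentropy expects one-hot encoded labels, which matches the encoding produced in the input pipeline described earlier.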

Next, we will look at the training procedure. To that end, we define a train method for the TransferModel class introduced above:

import numpy as np
import tensorflow as tf
from tensorflow.keras.callbacks import EarlyStopping

def train(self,
          ds_train: tf.data.Dataset,
          epochs: int,
          ds_valid: tf.data.Dataset = None,
          class_weights: np.array = None):
    """
    Trains the model on ds_train for epochs rounds

    Args:
        ds_train: training data as tf.data.Dataset
        epochs: number of epochs to train
        ds_valid: optional validation data as tf.data.Dataset
        class_weights: optional class weights to treat unbalanced classes

    Returns
        Training history from self.history
    """
    # Define early stopping as callback
    early_stopping = EarlyStopping(monitor='val_loss',
                                   min_delta=0,
                                   patience=12,
                                   restore_best_weights=True)

    callbacks = [early_stopping]

    # Fitting
    self.history = self.model.fit(ds_train,
                                  epochs=epochs,
                                  validation_data=ds_valid,
                                  callbacks=callbacks,
                                  class_weight=class_weights)