Preface

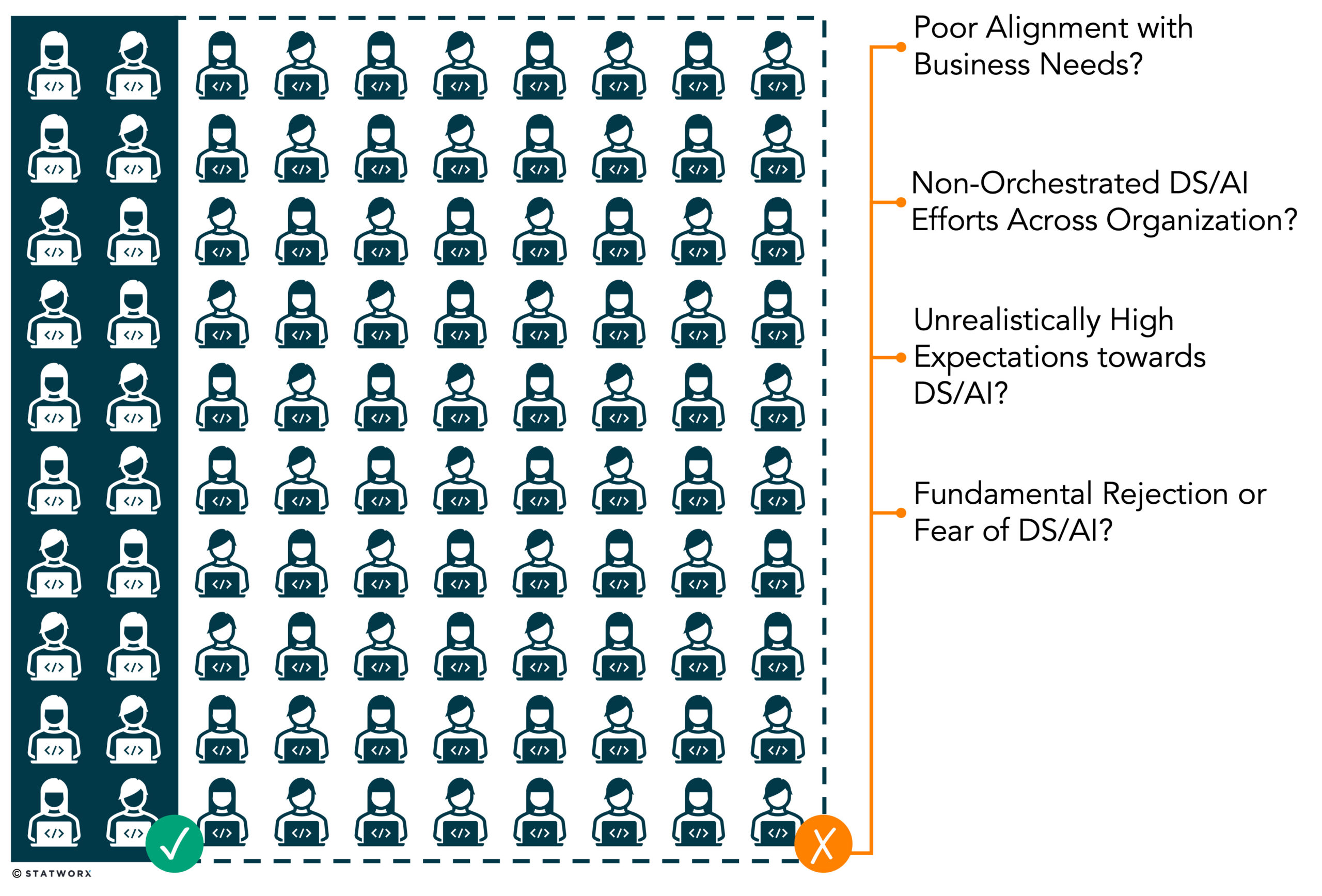

Every data science and AI professional out there will tell you: real-world data science (DS) & AI projects involve various challenges for which neither hands-on coding competitions nor theoretical lectures will prepare you. And sometimes, alarmingly often [1, 2], these real-life issues cause promising AI projects or whole AI initiatives to fail.

There has been an active discussion of the more technical pitfalls and potential solutions for quite some time now. The better-known issues include, for example, siloed data, bad data quality, inexperienced or under-staffed DS & AI teams, and insufficient infrastructure for training and serving models. Another issue is that too many solutions are never moved into production due to organizational problems.

Only recently, the focus of the discourse has shifted more towards strategic issues. But in my opinion, these perspectives still do not get the attention they deserve.

That is why in this post, I want to share my take on the most important (non-technical) reasons why DS & AI initiatives fail. Of course, I’ll also give you some input on how to solve these issues. I’m a Data & Strategy Consultant at statworx, and this article is admittedly rather subjective: it reflects my personal experience of the problems and solutions I came across.

Issue #1: Poor Alignment of Project Scope and Actual Business Problem

One problem that occurs far more often than one might expect is the misalignment of developed data science & AI solutions and real business needs. The finished product might perfectly serve the exact task the DS & AI team set out to solve; however, the business users might be looking for a solution to a similar but significantly different task.

Too little exchange due to indirect communication channels or a lack of a shared language and frame of reference often leads to fundamental misunderstandings. The problem is that, quite ironically, only extremely detailed, effective communication could uncover such subtle issues.

Including Too Few or Selective Perspectives Can Lead To a Rude Awakening

In other cases, individual sub-processes or the working methods of individual users differ a lot. Often, they vary so much that a solution that is a great benefit for one of the users/processes is hardly advantageous for all the others (developing solution variants is sometimes an option, but it is far less cost-efficient). If you are lucky, you will notice this at the beginning of a project when eliciting requirements. If you are unlucky, the rude awakening only occurs during broader user testing or even during roll-out. It then turns out that the users or experts who influenced the previous development did not provide generalizable input, so the developed tool is not generally applicable.

How to Counteract This Problem:

- Conduct structured and in-depth requirements engineering. Invest the time to talk to as many experts/users as possible and try to make all implicit assumptions as explicit as possible. While requirements engineering stems from the waterfall paradigm, it can easily be adapted for agile development. The elicited requirements simply must not be understood as definite product features but as items for your initial backlog that are constantly up for (re)evaluation and (re)prioritization.

- Be sure to define success measures. Do so before the start of the project, ideally in the form of objectively quantifiable KPIs and benchmarks. This helps significantly to pin down the business problem/business value at the heart of the sought-after solution.

- Whenever possible and as fast as possible, create prototypes or mock-ups. Present these solution drafts to as many test users as possible. These methods strongly facilitate the elicitation of candid and precise user feedback to inform further development. Be sure to involve a user sample that is representative of the entirety of users.

Issue #2: Loss of Efficiency and Resources Due to Non-Orchestrated Data Science & AI Efforts

Decentralized data science & AI teams often develop their use cases with little to no exchange or alignment between the teams’ current use cases and backlogs. This can cause different teams to accidentally and unknowingly develop (parts of) the same (or a very similar) solution.

In most cases, when such a situation is discovered, one of the redundant DS & AI solutions is discontinued or denied any future funding for further development or maintenance. Either way, the redundant development of use cases always results in direct waste of time and other resources with no or minimal added value.

The lack of alignment of an organization’s use case portfolio with the general business or AI strategy also can be problematic. This can cause high opportunity costs: Use cases that do not contribute to the general AI vision might unnecessarily bind valuable resources. Further, potential synergies between more strategically significant use cases might not be fully exploited. Lastly, competence building might happen in areas that are of little to no strategic significance.

How to Counteract This Problem:

- Communication is key. That is why there always should be a range of possibilities for the data science professionals within an organization to connect and exchange their lessons learned and best practices – especially for decentralized DS & AI teams. To make this work, it is essential to establish an overall working atmosphere of collaboration. The free sharing of successes and failures and thus internal diffusion of competence can only succeed without competitive thinking.

- Another option to mitigate the issue is to establish a central committee entrusted with the organization’s DS & AI use case portfolio management. The committee should include representatives of all (decentralized) DS & AI departments and general management. Together, the committee oversees the alignment of use cases and the AI strategy, preventing redundancies while fully exploiting synergies.

Issue #3: Unrealistically High Expectations of Success in Data Science & AI

It may sound paradoxical, but over-optimism regarding the opportunities and capabilities of data science & AI can be detrimental to success. That is because overly optimistic expectations often result in underestimating the requirements, such as the time needed for development or the volume and quality of the required data.

At the same time, the expectations regarding model accuracy are often too high, with little to no understanding of model limitations and basic machine learning mechanics. This inexperience might prevent acknowledgment of many important facts, including but not limited to the following points: the inevitable extrapolation of historical patterns to the future; the fact that external paradigm shifts or shocks endanger the generalizability and performance of models; the complexity of harmonizing predictions of mathematically unrelated models; low naïve model interpretability or dynamics of model specifications due to retraining.

DS & AI is simply no magic bullet, and overly high expectations can lead to enthusiasm turning into deep opposition. The initial expectations are almost inevitably unfulfilled and thus often give way to a profound and undifferentiated rejection of DS & AI. Subsequently, this can cause less attention-grabbing but beneficial use cases to no longer find support.

How to Counteract This Problem:

- When dealing with stakeholders, always try to convey realistic prospects in your communication. Make sure to use unambiguous messages and objective KPIs to manage expectations and address concerns as openly as possible.

- The education of stakeholders and management in the basics of machine learning and AI empowers them to make more realistic judgments and thus more sensible decisions. Technical in-depth knowledge is often unnecessary. Conceptual expertise with a relatively high level of abstraction is sufficient (and luckily much easier to attain).

- Finally, whenever possible, a PoC should be carried out before any full-fledged project. This makes it possible to gather empirical indications of the use case’s feasibility and helps in the realistic assessment of the anticipated performance measured by relevant (predefined!) KPIs. It is also important to take the results of such tests seriously. In the case of a negative prognosis, it should never simply be assumed that with more time and effort, all the problems of the PoC will disappear into thin air.
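
To make the point about predefined KPIs a bit more tangible, here is a minimal Python sketch of a go/no-go check for a PoC. Everything in it is an assumption made for illustration: the `Kpi` class, the `assess_poc` helper, the KPI names, and all numbers are hypothetical and not taken from any real project. The only idea it tries to capture is that targets are fixed before the PoC starts and that the results are then compared against them without moving the goalposts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """A success measure agreed on before the PoC starts (hypothetical structure)."""
    name: str
    target: float                  # threshold fixed up front
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def assess_poc(kpis: list[Kpi], observed: dict[str, float]) -> dict[str, bool]:
    """Return, per KPI, whether the PoC result meets the predefined target.

    A KPI without an observed value counts as not met, so gaps in the
    evaluation cannot silently pass.
    """
    return {
        kpi.name: kpi.name in observed and kpi.is_met(observed[kpi.name])
        for kpi in kpis
    }


# Illustrative numbers only; they are not taken from any real project.
kpis = [
    Kpi("forecast_mape", target=0.15, higher_is_better=False),
    Kpi("share_of_users_satisfied", target=0.70),
]
poc_results = {"forecast_mape": 0.22, "share_of_users_satisfied": 0.75}

verdict = assess_poc(kpis, poc_results)
go = all(verdict.values())
print(verdict, "-> go" if go else "-> no-go / rescope")
```

In this made-up example the error KPI misses its target, so the sketch reports a no-go. In practice, that would be the moment for an honest discussion about rescoping rather than for assuming that more time and effort will fix things.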

Issue #4: Resentment and Fundamental Rejection of Data Science & AI

An invisible hurdle, but one that should never be underestimated, lies in the minds of people. This can hold true for the workforce as well as for management. Often, promising data science & AI solutions are thwarted due to deep-rooted but undifferentiated reservations. The right mindset is decisive.

Although everyone is talking about DS and AI, many organizations still lack real management commitment. Frequently, lip service is paid to DS & AI and substantial funds are invested, but reservations about AI remain.

This is often ostensibly justified by the inherent biases and uncertainty of AI models and their low direct interpretability. In addition, sometimes, there is a general unwillingness to accept insights that do not correspond with one’s intuition. The fact that human intuition is often subject to much more significant – and, in contrast to AI models, unquantifiable – biases is usually ignored.

Data Science & AI Solutions Need the Acceptance and Support of the Workforce

These reservations lead to (decision-making) processes and organizational structures (e.g., roles, responsibilities) not being adapted in such a way that DS and AI solutions can deliver their (full) benefit. Such adaptation is necessary, however, because data science & AI is not just another software solution that can be seamlessly integrated into existing structures.

DS & AI is a disruptive technology that will inevitably reshape entire industries and organizations alike. Organizations rejecting this change are likely to fail in the long run, precisely because of this paradigm shift. The rejection of change begins with seemingly minor matters such as shifting from project management via the waterfall method towards agile, iterative development. Irrespective of the generally positive reception of certain change measures, there is sometimes a completely irrational refusal to reform current (still) functioning processes. Yet this is exactly what is needed to remain competitive in the long term, admittedly only after a phase of readjustment.

While vision, strategy, and structures must be changed top-down, the day-to-day operational doing can only be revolutionized bottom-up, driven by the workforce. Management commitment and the best tool in the world are useless if the end-users are not able or willing to adopt it. General uncertainty about the long-term AI roadmap and the fear of being replaced by machines fuel resistance, with the result that DS & AI solutions are not integrated into everyday work. This is, of course, more than problematic, as only the (correct) application of AI solutions creates added value.

How to Counteract This Problem:

- Unsurprisingly, sound AI change management is the best approach to mitigate the anti-AI mindset. It should be an integral part of any DS & AI initiative rather than an afterthought, and responsibilities for this task should be clearly assigned. Early, widespread, detailed, and clear communication is vital. Which steps will presumably be implemented, when, and how exactly? Remember that once trust has been lost, it is tough to regain it. Therefore, any uncertainties in the planning should be addressed openly. It is crucial to create a basic understanding of the matter among all stakeholders and clarify the necessity of change (e.g., otherwise endangered competitiveness, success stories, or competitors’ failures). In addition, dialogue with concerned parties is of great importance. Feedback should be sought early and acted upon where possible. Concerns should always be heard and respected, even if they cannot be addressed. However, false promises must be strictly avoided; instead, try to focus on the advantages of DS & AI.

- In addition to understanding the need for change, the fundamental ability to change is essential. The fear of the unknown or incomprehensible is inherent in us humans. Therefore, education (only on the level of abstraction and depth necessary for the respective role) can make a big difference. Appropriate training measures are not a one-time endeavor; the development of up-to-date knowledge and training in the field of DS & AI must be ensured in the long term. General data literacy of the workforce must be ensured as well as up- or re-skilling of technical experts. Employees must be given a realistic chance to gain new and more attractive job opportunities by educating themselves and engaging with DS & AI. Losing (parts of) their old jobs through DS & AI should never be the most probable outcome. DS & AI must be perceived as an opportunity and not as a danger; it must create perspectives and not spoil them.

- Adopt or adapt the best practices of DS & AI leaders in terms of defining role and skill profiles, adjusting organizational structures, and value-creation processes. Battle-proven approaches can serve as a blueprint for reforming your organization and thereby ensure you remain competitive in the future.

Closing Remarks

As you might have noted, this blog post does not offer easy solutions. This is because the issues at hand are complex and multi-dimensional. This article gave you high-level ideas on how to mitigate the addressed problems, but it must be stressed that these issues call for a holistic solution approach. This requires a clear AI vision and a derived sound AI strategy according to which the vast number of necessary actions can be coordinated and directed.

That is why I must stress that we have long left the stage where experimental and unstructured data science & AI initiatives could be successful. DS & AI must not be treated as a technical topic that takes place solely in specialist departments. It is time to address AI as a strategic issue. As with the digital revolution, only organizations in which AI completely permeates and reforms daily operations and the general business strategy will be successful in the long term. As described above, this undoubtedly holds many pitfalls in store but also represents an incredible opportunity.

If you are willing to integrate these changes but don’t know where to start, we at STATWORX are happy to help. Check out our website and learn more about our AI strategy offerings!

Sources

[1] https://www.forbes.com/sites/forbestechcouncil/2020/10/14/why-do-most-ai-projects-fail/?sh=2f77da018aa3

[2] https://blogs.gartner.com/andrew_white/2019/01/03/our-top-data-and-analytics-predicts-for-2019/