It’s Christmas time at statworx: Christmas songs are playing during the lunch break, the office is festively decorated and the Christmas party has already been a great success. But statworx wouldn’t be a consulting and development company for data science, machine learning and AI if we didn’t bring our expertise and passion to our Christmas preparations.

Surrounded by the smell of freshly baked cookies and Christmas punch, we came up with the idea of an AI-based Christmas recipe generator that uses OpenAI models to complete and visualize texts of any kind. With GPT-3, the description of a recipe is enough to generate a complete list of ingredients as well as cooking instructions. The complete text is then passed as an image description to DALL-E 2, which turns it into a high-resolution image. Of course, both models have applications in day-to-day work that go far beyond entertainment, but we believe a playful get-to-know-you session over cookies and mulled wine is the ideal introduction.
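The two-stage flow described above (text completion first, then the full text as an image description) can be sketched as follows. This is a minimal illustration under stated assumptions, not the generator's actual code: the prompt template, the function names and the 1,000-character image-prompt limit are all hypothetical, and the two model calls are passed in as plain callables so the pipeline can be tried with stubs instead of real OpenAI requests.

```python
from typing import Callable, Dict, List

# ASSUMPTION: prompt-length limit for the image model; check the current
# API documentation before relying on this value.
MAX_IMAGE_PROMPT_CHARS = 1000


def build_completion_prompt(recipe_name: str, ingredients: List[str]) -> str:
    """Hypothetical template asking a text model to finish a recipe."""
    listed = "\n".join(f"- {item}" for item in ingredients)
    return (
        f"Recipe: {recipe_name}\n"
        f"Ingredients (incomplete):\n{listed}\n"
        "Complete the list of ingredients and write step-by-step cooking instructions.\n"
    )


def build_image_prompt(recipe_text: str, limit: int = MAX_IMAGE_PROMPT_CHARS) -> str:
    """Collapse whitespace and truncate the recipe so it fits the image prompt."""
    flat = " ".join(recipe_text.split())
    return flat[:limit]


def generate_recipe(
    recipe_name: str,
    ingredients: List[str],
    complete_text: Callable[[str], str],   # hypothetical wrapper around a text model
    generate_image: Callable[[str], str],  # hypothetical wrapper around an image model
) -> Dict[str, str]:
    """Stage 1: complete the recipe text. Stage 2: use the full text as image description."""
    prompt = build_completion_prompt(recipe_name, ingredients)
    full_text = prompt + complete_text(prompt)
    image_ref = generate_image(build_image_prompt(full_text))
    return {"text": full_text, "image": image_ref}


# Usage with stub models instead of real API calls:
result = generate_recipe(
    "Gingerbread Hawaii",
    ["gingerbread", "pineapple"],
    complete_text=lambda p: "- ham\n1. Layer everything and bake.",
    generate_image=lambda p: f"<image for {len(p)} chars>",
)
print(result["text"])
print(result["image"])
```

Injecting the model calls keeps the prompt logic independent of any particular client library, so the same pipeline works whether the callables wrap a real API, a cache or a test stub.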

Before we get creative and test the Christmas recipe generator together, this blog post takes a brief look at the models behind it.

The leaves are falling but AI is blossoming with the help of GPT-3

The pace of development of large language models and text-to-image models over the past two years has been breathtaking and has swept all of our employees along with it. From avatars and internal memes to synthetic data for projects, it is not only the quality of the results that has changed, but also the way we work with the models. Where there were once performant code, statistical analysis and lots of Greek letters, working with some models now almost resembles a conversation. The models are steered with so-called prompts: instructions written as free text or as lists of keywords.

Fig. 1: Fancy a Christmas punch with a shot? The starting point for this image was the prompt “A rifle pointed at a Christmas mug”. With a lot of trial and error, and often surprisingly detailed prompts with many keywords, the results can be strongly influenced.

This form of interaction is partly due to the GPT-3 language model, a Transformer-based deep learning model. The arrival of this revolutionary language model not only marked a turning point for the research field of natural language processing (NLP), but also heralded a paradigm shift in AI development: prompt engineering.

While many areas of machine learning remain unaffected, for others it meant the biggest breakthrough since the advent of neural networks. In the unaffected areas, probability distributions are still learned, target variables predicted, or embeddings used: compressed intermediate representations produced by a neural network and optimized for information content and further processing. For other use cases, mostly creative ones, it is now enough to describe the desired result in natural language and adapt the wording to how the model behaves. More on prompt engineering can be found in this blog post.

In general, one of the most important innovations is the ability of so-called Transformer models to capture a sentence and its words as a dynamic context. This matters because words (in this case, cooking and baking ingredients) can have different meanings in different recipes, and the model can now capture these relationships. In our first AI cooking experiments, this was not yet the case. With rigid Word2Vec models, which assign each word a single fixed vector, beef broth or pureed tomatoes might be recommended instead of or alongside red wine, regardless of whether the wine was meant for a jelly for cookies or for mulled wine, simply because the savory use predominated in the training data!
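The difference between a fixed Word2Vec-style vector and a context-dependent one can be illustrated with a toy example. The sketch below is deliberately simplified and rests on assumptions: the three-dimensional word vectors are invented for illustration, and the "contextual" step is a single unparameterized scaled dot-product self-attention (queries, keys and values are the input vectors themselves). Real Transformers add learned projections, multiple heads and many stacked layers.

```python
import math
from typing import Dict, List

Vector = List[float]

# Invented toy vectors; dimensions loosely stand for (savory, sweet, drink).
STATIC: Dict[str, Vector] = {
    "red": [0.2, 0.2, 0.2],
    "wine": [0.8, 0.3, 0.5],
    "beef": [1.0, 0.0, 0.0],
    "stew": [0.9, 0.1, 0.1],
    "mulled": [0.0, 0.7, 0.9],
    "cinnamon": [0.0, 1.0, 0.2],
}


def static_embedding(sentence: List[str], word: str) -> Vector:
    """Word2Vec-style lookup: the surrounding sentence is ignored entirely."""
    return STATIC[word]


def self_attention(vectors: List[Vector]) -> List[Vector]:
    """Unparameterized scaled dot-product self-attention (Q = K = V = inputs)."""
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Each output is a weighted average of all vectors in the sentence.
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs


def contextual_embedding(sentence: List[str], word: str) -> Vector:
    """The same word gets a vector blended with the other words in its sentence."""
    outputs = self_attention([STATIC[w] for w in sentence])
    return outputs[sentence.index(word)]


hearty = ["beef", "stew", "red", "wine"]
festive = ["mulled", "red", "wine", "cinnamon"]

print(static_embedding(hearty, "wine") == static_embedding(festive, "wine"))  # True
print(contextual_embedding(hearty, "wine"))
print(contextual_embedding(festive, "wine"))
```

With the static lookup, "wine" is identical in both sentences; after the attention step, "wine" in the stew sentence leans towards the savory dimension, while in the mulled-wine sentence it leans towards the sweet one.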

Christmas image generation with DALL-E 2

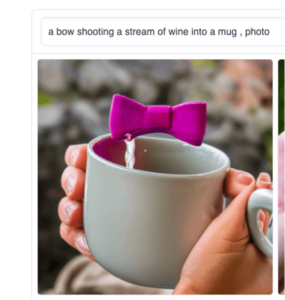

In our Christmas recipe generator, we then use DALL-E 2 to generate an image from the completed text. DALL-E 2 is a neural network that can generate high-resolution images from text descriptions. There are hardly any limits: the images resulting from creative word input make the impossible seem possible. However, misunderstandings often occur, as some of the following examples show.

Fig. 2: Experienced programmers will recognize this immediately: it’s not you, it’s me! Complex, pedantic or simply logical, programs usually expose our errors and loose assumptions right away.

This makes getting to know the model even more important, because small changes to the prompt or specific keywords can strongly influence the result. Websites like PromptHero collect earlier results together with their prompts (by the way, what works for one model does not necessarily work for another) and provide inspiration for high-resolution generated images.

Fig. 3: We wanted to test what was possible and generated cinnamon cookies and a gingerbread Hawaii with pineapple and ham. The results remain off the scale of taste and dignity.

And how would the model make a coffee at the Acropolis or in a Canadian Indian summer? Or a pun(s)ch with a kick?

Fig. 4: Packs quite the punch.

Complex technology, casual introductions

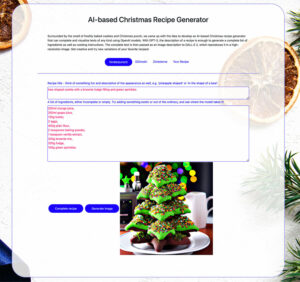

Enough theory and back to practice.

Now it’s time to test our Christmas recipe generator and have it recommend a recipe based on a recipe name and an incomplete list of desired ingredients. Creative names, descriptions and shapes are encouraged, unconventional ingredients are explicitly welcome, and surprising interpretations by the model are almost guaranteed.

Go to Christmas Recipe Generator

The GPT-3 model used for text completion was trained on such a broad range of text that English Wikipedia accounts for only about 3% of its training mix, and there is no end in sight to possible new use cases. Just open our little web app to generate a punch, a cookie or any other recipe, and be amazed at how far Natural Language Processing and text-to-image generation have come.

We wish you a lot of fun!